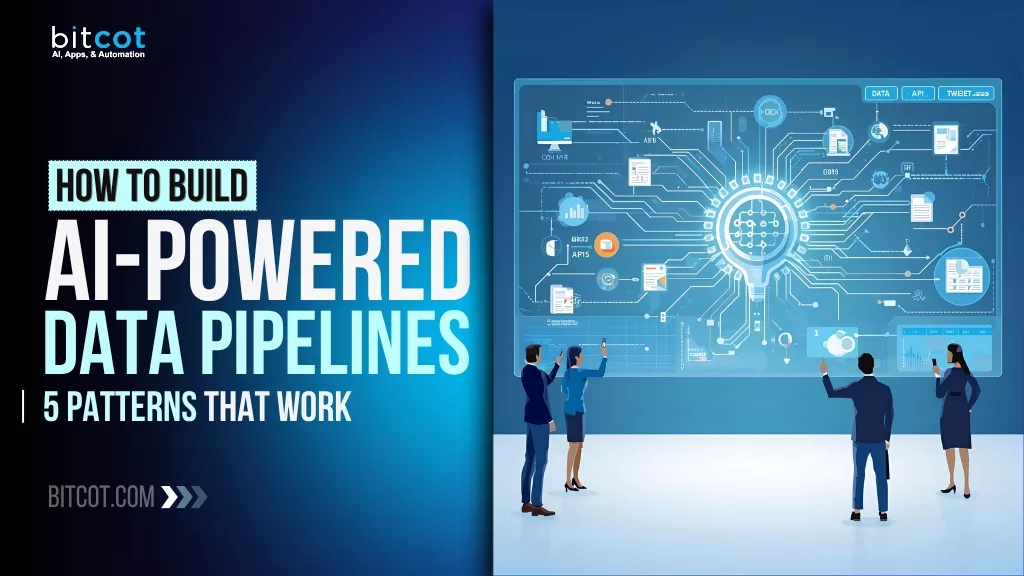

Your data is everywhere: streaming from apps, dashboards, CRMs, sensors, marketing tools, spreadsheets, and services your teams barely remember subscribing to.

And every part of the business expects those numbers to be clean, current, and instantly useful.

But when your data systems don’t connect, every analysis starts from scratch. Your team scrambles to merge files, fix broken pipelines, and explain why numbers don’t match, while the business loses confidence in the insights meant to guide decisions.

Sound familiar?

Today’s teams expect real-time, reliable, AI-ready data they can trust. When your pipelines can’t keep up, you slow down product launches, delay decisions, and miss opportunities hiding in the noise.

In this post, we’ll walk through the practical steps to build AI-powered data pipelines that actually work: five patterns you can adopt immediately, from real-time ingestion and embedding-based enrichment to continuous validation, plus a three-phase plan for putting them into production.

You’ll get clear examples and a simple blueprint you can apply right away.

Ask yourself:

- How many hours do you lose every week fixing data issues?

- How often does “AI” fail because the data isn’t ready?

- You already know these problems. How far along are you in solving them?

Whether you’re a data engineer, a technical leader, or someone responsible for delivering insights, the challenge is the same. Every broken pipeline means another missed chance to unlock value from your data.

AI-powered pipelines change that. They automate the messy parts, enrich your data in motion, and deliver trustworthy outputs your teams can rely on.

Bitcot helps you make that shift. We build custom AI data systems that streamline ingestion, automate quality checks, and power the analytics and models your business depends on.

The future of data engineering is already here. Are you ready to build pipelines that keep up?

What Are AI-Powered Data Pipelines?

AI-powered data pipelines are data workflows that incorporate machine learning and large language models directly into the process of collecting, transforming, and routing data.

Instead of relying solely on predefined rules, these pipelines use statistical models and AI reasoning to interpret what the data is and how it should be handled.

In a traditional pipeline, every step must be explicitly defined: how to parse a field, how to clean a column, how to match records, how to extract meaning from text. The pipeline follows the exact instructions given, and if the data changes in format or quality, the pipeline breaks. This creates a rigid system that works only when the input is perfectly consistent.

In contrast, an AI-powered pipeline embeds intelligence into these steps. When the pipeline encounters text, an AI model can infer intent, sentiment, categories, or relationships without needing a prewritten rule.

When it encounters numerical data, a model can learn normal patterns and detect values that do not fit. When documents arrive as PDFs or images, a model can extract and structure the information automatically.

The key idea is that the pipeline can make decisions based on learned patterns. It doesn’t need every possible variation to be anticipated ahead of time. For example, instead of defining explicit regex rules to clean product names coming from multiple systems, a model can recognize that “iPhone 15 Pro Max,” “iphone15 pro-max,” and “IP 15 PM” refer to the same product.
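To see why hand-written rules struggle here, consider the kind of cleaning rule a traditional pipeline might use: lowercase the name and strip everything that is not a letter or digit. The Python sketch below (the helper names are illustrative, not from any library) merges the first two variants but cannot connect the abbreviation, which is exactly the gap a learned model is meant to close.

```python
import re
from collections import defaultdict


def normalize_rule_based(name: str) -> str:
    """A typical hand-written cleaning rule: lowercase, then drop non-alphanumerics."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def group_by_rule(names: list[str]) -> dict[str, list[str]]:
    """Group raw product names that collapse to the same normalized key."""
    groups: dict[str, list[str]] = defaultdict(list)
    for name in names:
        groups[normalize_rule_based(name)].append(name)
    return dict(groups)


variants = ["iPhone 15 Pro Max", "iphone15 pro-max", "IP 15 PM"]
for key, members in group_by_rule(variants).items():
    print(key, "->", members)
# iphone15promax -> ['iPhone 15 Pro Max', 'iphone15 pro-max']
# ip15pm -> ['IP 15 PM']
```

Catching “IP 15 PM” would take yet another rule, such as an abbreviation table, and every new source tends to need more of them. That growing maintenance burden is what model-based matching aims to replace.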

Instead of manually mapping thousands of customer support messages into categories, a language model can classify them based on their semantic meaning.

In essence, AI-powered data pipelines are not just sequences of extraction and transformation steps. They are dynamic systems where models analyze data, infer structure, recognize patterns, and apply complex transformations automatically.

The pipeline becomes partly self-interpreting: able to understand the nature of the data it processes, rather than simply applying predetermined operations.

Benefits of an AI-Powered Data Pipeline

AI-powered data pipelines bring a level of intelligence and flexibility that traditional pipelines cannot achieve.

By embedding machine learning and language models directly into the flow of data, the pipeline becomes capable of interpreting, validating, and transforming information in ways that previously required extensive manual work.

Improved Data Quality

AI models can spot unusual patterns, inconsistent formats, or values that fall outside expected ranges. Instead of relying on rigid rules, the pipeline uses learned behavior to detect and correct issues automatically. This means errors are caught as they appear, not after they reach dashboards or reports.

Less Manual Cleanup

Many repetitive tasks, such as categorizing text, extracting fields, normalizing labels, and matching similar records, become automated. The pipeline performs these operations consistently, reducing the need for engineers and analysts to intervene or repeatedly adjust logic when inputs change.

Unified Handling of Unstructured Data

AI makes it possible to process emails, chats, PDFs, logs, and other unstructured formats within the same pipeline as structured tables. Models can extract the important entities, sentiments, or key points and convert them into structured fields. This brings previously inaccessible data into the analytics and automation ecosystem.

Adaptability to Changing Inputs

When data sources evolve with new fields, new formats, or new terminology, traditional pipelines often break. AI-powered pipelines can interpret these changes and adjust without requiring new rules for every variation. This adaptability keeps the pipeline stable even when upstream systems aren’t.

Ready for Advanced Use Cases

Because the pipeline produces clean, enriched, and well-structured outputs, the data becomes easier to use for analytics, automation, or downstream AI applications. Embeddings, classifications, and extracted entities are already generated as part of the flow, making the data immediately usable across the organization.

Together, these benefits create a pipeline that is not only more capable but also more aligned with the complexity and variability of modern data environments.

The Core Components of an AI-Powered Data Pipeline

An AI-powered data pipeline still contains the familiar stages of ingestion, transformation, and delivery, but each stage now includes intelligence that helps the system interpret and shape the data in real time.

Instead of functioning as a rigid sequence of scripted steps, the pipeline becomes a coordinated set of components that work together to understand both the structure and meaning of the information flowing through it.

Ingestion Layer

This is where data enters the system: streaming events, API calls, batch files, messages, documents, or logs. In an AI-driven pipeline, ingestion doesn’t simply collect raw inputs. It can also classify data types, detect formats automatically, route content to the right processing steps, and identify unstructured items (like text or PDFs) that need additional interpretation.
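Much of this first-pass routing can start with cheap content sniffing before any model is involved. The Python sketch below checks file signatures and simple structural cues, then sends each payload to a destination. The destination names (`structured_transform`, `llm_enrichment`, and so on) are hypothetical, and in practice a trained classifier could replace or back up these heuristics.

```python
import json

# Hypothetical downstream steps: structured payloads go straight to transformation,
# unstructured content is sent on for model-based interpretation.
ROUTES = {
    "json": "structured_transform",
    "csv": "structured_transform",
    "pdf": "document_extraction",
    "image": "document_extraction",
    "text": "llm_enrichment",
    "binary": "quarantine",
}


def detect_format(payload: bytes) -> str:
    """First-pass format detection from the content itself, not the file name."""
    if payload.startswith(b"%PDF-"):
        return "pdf"
    if payload.startswith(b"\x89PNG\r\n\x1a\n") or payload.startswith(b"\xff\xd8\xff"):
        return "image"  # PNG or JPEG file signature
    try:
        text = payload.decode("utf-8")
    except UnicodeDecodeError:
        return "binary"
    try:
        if isinstance(json.loads(text), (dict, list)):
            return "json"
    except json.JSONDecodeError:
        pass
    # Treat it as CSV only if several lines share the same, non-zero comma count.
    lines = [line for line in text.splitlines() if line.strip()]
    comma_counts = {line.count(",") for line in lines}
    if len(lines) >= 2 and len(comma_counts) == 1 and comma_counts.pop() > 0:
        return "csv"
    return "text"


def route(payload: bytes) -> str:
    return ROUTES[detect_format(payload)]


print(route(b'{"order_id": 1001, "status": "shipped"}'))     # structured_transform
print(route(b"id,amount\n1,9.99\n2,4.50"))                   # structured_transform
print(route(b"Hi, my order never arrived.\nCan you help?"))  # llm_enrichment
```

Anything the heuristics label as plain text is the kind of unstructured item that gets forwarded for additional interpretation.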

Pre-Processing and Parsing

Before data can be enriched or transformed, it must be interpreted. AI models at this stage can extract text from documents, convert speech to text, identify entities in messages, or detect the structure within loosely formatted data. This step replaces many brittle parsing rules and creates a clean intermediate representation for the rest of the pipeline.

AI/ML Enrichment Layer

This is the heart of the AI-powered pipeline. Models analyze the incoming data and generate meaningful outputs such as categories, tags, intents, summaries, anomaly scores, embeddings, or predictions. This layer turns raw inputs into semantically rich information that downstream systems can use immediately.

Transformation and Normalization

Traditional transformation steps still exist, such as joins, aggregations, conversions, and filters, but they are now guided or enhanced by AI-generated context. Model outputs help standardize names, match related records, infer missing values, and maintain consistent formats even as upstream data evolves.
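As a minimal illustration of inferring missing values, the sketch below fills a gap with the median of similar records (same category) and falls back to the overall median. This is a simple statistical baseline standing in for a learned model; the field names are hypothetical, and a regression or nearest-neighbor model trained on other attributes could replace the `median` call.

```python
from statistics import median
from typing import Optional


def impute_by_group(rows: list[dict], value_key: str, group_key: str) -> list[dict]:
    """Fill missing values with the median of the row's group, else the global median."""
    by_group: dict[str, list[float]] = {}
    for row in rows:
        if row.get(value_key) is not None:
            by_group.setdefault(row[group_key], []).append(row[value_key])
    observed = [v for values in by_group.values() for v in values]
    global_median: Optional[float] = median(observed) if observed else None

    filled = []
    for row in rows:
        new_row = dict(row)  # copy so the caller's records are not mutated
        if new_row.get(value_key) is None:
            group_values = by_group.get(new_row[group_key])
            new_row[value_key] = median(group_values) if group_values else global_median
            new_row[f"{value_key}_imputed"] = True  # flag inferred values for auditing
        filled.append(new_row)
    return filled


products = [
    {"sku": "A1", "category": "shoes", "weight_kg": 0.9},
    {"sku": "A2", "category": "shoes", "weight_kg": 1.1},
    {"sku": "A3", "category": "shoes", "weight_kg": None},
    {"sku": "B1", "category": "bags", "weight_kg": 2.0},
    {"sku": "C1", "category": "hats", "weight_kg": None},
]
for record in impute_by_group(products, "weight_kg", "category"):
    print(record)
```

Flagging imputed values (`weight_kg_imputed` here) lets downstream users tell observed data from inferred data.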

Validation and Quality Checks

Instead of relying solely on static validation rules, AI-powered checks can compare current patterns to historical norms, detect subtle anomalies, or evaluate whether enriched outputs make sense. This provides dynamic quality control that adjusts as the data changes over time.

Storage and Routing

Once processed, data moves into warehouses, vector stores, databases, analytical tools, or application endpoints. AI can assist by deciding the best destination based on metadata, content type, or usage patterns, ensuring each piece of information ends up where it can be most useful.

Feedback Loop

A defining feature of AI-driven pipelines is their ability to learn from real-world interactions. Corrections, user feedback, and downstream performance inform model behavior, allowing the pipeline to refine future outputs without constant manual tuning. This creates a continuously improving system rather than a static one.
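A feedback loop can start very simply: capture reviewer corrections, apply them immediately to matching inputs, and keep them as labeled examples for the next retraining or prompt update. The Python sketch below assumes a hypothetical `model` callable that maps text to a label; the exact-match override is deliberately basic, and a production system would also track how often corrections occur.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FeedbackLoop:
    """Wraps a model so reviewer corrections apply immediately and are kept for retraining."""

    model: Callable[[str], str]  # hypothetical classifier: text -> label
    corrections: dict[str, str] = field(default_factory=dict)

    @staticmethod
    def _key(text: str) -> str:
        # Normalize case and whitespace so trivially different inputs share a correction.
        return " ".join(text.lower().split())

    def predict(self, text: str) -> str:
        key = self._key(text)
        if key in self.corrections:
            return self.corrections[key]  # a human correction overrides the model
        return self.model(text)

    def record_correction(self, text: str, correct_label: str) -> None:
        self.corrections[self._key(text)] = correct_label

    def training_examples(self) -> list[tuple[str, str]]:
        # (normalized text, label) pairs for the next fine-tuning run or few-shot prompt.
        return list(self.corrections.items())


def naive_model(text: str) -> str:
    """Stand-in for a real classifier, for demonstration only."""
    return "billing" if "charge" in text.lower() else "general"


loop = FeedbackLoop(model=naive_model)
print(loop.predict("I was charged twice for shipping"))   # billing
loop.record_correction("I was charged twice for shipping", "shipping")
print(loop.predict("i was  charged twice for SHIPPING"))  # shipping
```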

Together, these components form a pipeline that interprets data, enriches it with meaning, adapts to new patterns, and becomes more accurate the longer it runs.

5 Patterns Behind Effective AI-Powered Data Pipelines

AI-powered pipelines can take many forms, but the most reliable systems share a handful of repeatable patterns. These patterns define how data is collected, interpreted, enriched, and delivered, and they serve as design templates you can reuse across different use cases.

Each pattern solves a specific challenge that appears again and again in real-world data workflows.

1. Real-Time Ingestion With Adaptive Classification

In this pattern, incoming data is not only captured but also immediately interpreted. A language model or classifier identifies the type of content, such as a support message, a transaction event, or a product update, so the pipeline knows where each item belongs. This reduces the need for hand-maintained routing logic and keeps data flowing smoothly as new sources or formats appear.
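One way to implement this pattern is to route on the classifier’s label only when it is confident, and send everything else to a review queue instead of guessing. In the Python sketch below, `keyword_stub` stands in for a real language model or trained classifier, and the destination names and the 0.8 threshold are illustrative assumptions.

```python
from typing import Callable, NamedTuple


class Prediction(NamedTuple):
    label: str
    confidence: float


# Hypothetical mapping from content type to downstream topic or queue.
DESTINATIONS = {
    "support_message": "support_tickets",
    "transaction_event": "payments_stream",
    "product_update": "catalog_updates",
}


def route_event(
    payload: str,
    classify: Callable[[str], Prediction],
    min_confidence: float = 0.8,
) -> str:
    """Send each event where its predicted type belongs; uncertain cases go to review."""
    pred = classify(payload)
    if pred.confidence < min_confidence or pred.label not in DESTINATIONS:
        return "needs_review"
    return DESTINATIONS[pred.label]


def keyword_stub(payload: str) -> Prediction:
    """Stand-in for an LLM or trained classifier, for demonstration only."""
    text = payload.lower()
    if "refund" in text or "help" in text:
        return Prediction("support_message", 0.92)
    if "amount" in text:
        return Prediction("transaction_event", 0.88)
    return Prediction("unknown", 0.30)


print(route_event("Please help, my refund never arrived", keyword_stub))  # support_tickets
print(route_event('{"amount": 42.5, "currency": "USD"}', keyword_stub))   # payments_stream
print(route_event("new SKU batch uploaded", keyword_stub))               # needs_review
```

The confidence threshold is the main design choice: sources or formats the model has not seen before surface in the review queue rather than being silently misrouted.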

2. Unstructured-to-Structured Conversion

Most companies deal with emails, PDFs, chat logs, and other messy formats that don’t fit neatly into tables. This pattern uses AI to extract entities, detect relationships, or summarize content into structured fields. It turns chaotic inputs into clean, usable data that can feed analytics, applications, or downstream models without requiring complex parsing rules.
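Model output is itself untrusted data, so it is worth validating before it lands in a table. The sketch below assumes an upstream language model has been prompted to return invoice fields as JSON (the invoice schema is a hypothetical example) and converts that reply into a typed record, rejecting anything malformed or implausible.

```python
import json
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class InvoiceFields:
    vendor: str
    invoice_date: date
    total: float
    currency: str


def parse_extraction(raw_model_output: str) -> Optional[InvoiceFields]:
    """Turn a model's JSON reply into a typed record, or None if it does not validate."""
    try:
        data = json.loads(raw_model_output)
        record = InvoiceFields(
            vendor=str(data["vendor"]).strip(),
            invoice_date=date.fromisoformat(data["invoice_date"]),
            total=float(data["total"]),
            currency=str(data["currency"]).upper(),
        )
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        return None  # malformed JSON, missing fields, or wrong types
    # Basic sanity checks on the values the model produced.
    if not record.vendor or record.total < 0 or len(record.currency) != 3:
        return None
    return record


reply = '{"vendor": "Acme Corp", "invoice_date": "2024-05-01", "total": "1299.50", "currency": "usd"}'
print(parse_extraction(reply))
print(parse_extraction('{"vendor": "Acme Corp", "total": 10}'))  # None (missing fields)
```

Rejected replies can be retried with a stricter prompt or routed to manual review.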

3. AI-Augmented Transformation and Normalization

Here, AI supports the transformation layer by matching similar records, standardizing labels, correcting inconsistent values, and filling in missing information. Instead of manually maintaining long lists of mappings or cleaning rules, the model recognizes patterns and applies the most likely interpretation. This creates consistency even when upstream systems vary widely.
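For record matching, the core idea is to score how similar two values are and accept a match only above a threshold. The sketch below uses Python’s standard-library `difflib.SequenceMatcher` as a character-level stand-in for the learned matcher this pattern describes; the customer names and the 0.85 threshold are illustrative.

```python
from difflib import SequenceMatcher
from typing import Optional


def _clean(name: str) -> str:
    """Light normalization: lowercase, drop periods and commas, collapse whitespace."""
    return " ".join(name.lower().replace(".", "").replace(",", "").split())


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for a learned matching model."""
    return SequenceMatcher(None, _clean(a), _clean(b)).ratio()


def match_record(name: str, canonical: list[str], threshold: float = 0.85) -> Optional[str]:
    """Return the most similar canonical name, or None if nothing clears the threshold."""
    best = max(canonical, key=lambda candidate: similarity(name, candidate))
    return best if similarity(name, best) >= threshold else None


customers = ["Acme Corporation", "Globex Inc", "Initech LLC"]
print(match_record("ACME Corporation.", customers))  # Acme Corporation
print(match_record("Acme Corporaton", customers))    # Acme Corporation (typo tolerated)
print(match_record("Acme Corp", customers))          # None (abbreviation scores only 0.72)
```

Note that “Acme Corp” falls below the threshold: character similarity tolerates typos but not abbreviations, which is where embedding-based or learned matching earns its place.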

4. Embedding-Based Enrichment

In this pattern, the pipeline generates vector embeddings for text, products, users, or other entities. These embeddings capture meaning and similarity, enabling search, personalization, recommendations, and retrieval-based AI applications. The pipeline becomes capable of producing semantically rich data that traditional ETL systems cannot generate on their own.
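At query time, embeddings are typically compared with cosine similarity. The sketch below uses hand-made three-dimensional vectors purely for illustration; real embeddings come from an embedding model and usually have hundreds or thousands of dimensions, and a vector database would use approximate nearest-neighbor search instead of this brute-force scan.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated (orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest neighbors by cosine similarity."""
    scored = [(item_id, cosine(query, vec)) for item_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings" for illustration; in practice these come from an embedding model.
index = {
    "trail running shoes": [0.9, 0.1, 0.0],
    "waterproof hiking boots": [0.8, 0.3, 0.1],
    "stainless steel kettle": [0.0, 0.1, 0.95],
}
query = [0.85, 0.2, 0.05]  # imagine this is the embedding of "outdoor footwear"
for item_id, score in top_k(query, index):
    print(f"{item_id}: {score:.3f}")
```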

5. Continuous Validation With Intelligent Alerts

Rather than relying on fixed thresholds or rules, this pattern applies anomaly detection and historical comparison to monitor data health. The pipeline learns normal behavior (volumes, distributions, formats, and relationships) and alerts the team when something deviates. This prevents subtle issues from propagating and makes the entire system more resilient to change.
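The simplest version of “learning normal behavior” is to compare today’s metric with recent history. The Python sketch below flags a daily row count that sits more than three standard deviations from the trailing mean; the counts are hypothetical, and the 3.0 cutoff is a common starting point rather than a fixed rule. Production monitors often add seasonality (weekday versus weekend patterns) on top of this.

```python
import math
from statistics import mean, stdev


def volume_alert(history: list[int], today: int, z_threshold: float = 3.0) -> tuple[bool, float]:
    """Flag today's row count if it is more than z_threshold standard deviations from history."""
    if len(history) < 2:
        return False, 0.0  # not enough history to learn what "normal" looks like
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Perfectly stable history: any change at all counts as a deviation.
        return today != mu, 0.0 if today == mu else math.inf
    z = (today - mu) / sigma
    return abs(z) > z_threshold, z


daily_rows = [10_120, 9_870, 10_340, 10_050, 9_960, 10_210, 10_000]  # hypothetical last 7 days
print(volume_alert(daily_rows, 10_180))  # within the normal range: no alert
print(volume_alert(daily_rows, 4_300))   # far below normal: alert
```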

How to Build AI-Powered Data Pipelines in 3 Phases

The future of data isn’t just about moving data; it’s about making that data smart. An AI-powered data pipeline goes beyond simple Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT).

It uses Machine Learning (ML) and Generative AI (GenAI) to automate complex tasks, proactively ensure data quality, and optimize the pipeline itself, so your ML models are fed the freshest, highest-quality features possible.

Here’s a step-by-step guide to building your intelligent data pipeline.

Phase 1: Foundation and AI Goal Setting

A successful AI pipeline starts with a clear business goal and the data to support it.

- Define the AI Objective: What is the business outcome? Are you building a churn prediction model, a real-time recommendation engine, or a Retrieval-Augmented Generation (RAG) system for a chatbot? Your goal dictates the data latency, volume, and transformation needs.

  Example: If the goal is real-time fraud detection, your pipeline must support low-latency streaming ingestion.

- Establish Data Requirements: Identify all necessary data sources (databases, APIs, logs, streaming platforms). Critically, define your Data Quality Thresholds: what level of missing values or inconsistency is acceptable for your model to perform reliably?

- Design the Architecture: Choose an architecture that supports both your current and future needs. Modern AI pipelines often leverage a Lakehouse architecture (combining the best of data lakes and data warehouses) and are typically built on cloud-native, serverless, or highly scalable open-source technologies (like Spark).
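Writing data quality thresholds down as configuration makes them enforceable rather than aspirational. The sketch below checks a batch against per-column limits on the share of missing values; the column names and limits are hypothetical and would come from your own model requirements.

```python
# Hypothetical thresholds: the maximum share of missing values each column may have
# before the batch is considered unfit for training or inference.
MAX_NULL_FRACTION = {"customer_id": 0.0, "amount": 0.01, "country": 0.05}


def check_null_thresholds(rows: list[dict], limits: dict[str, float]) -> dict[str, float]:
    """Return the columns whose observed null fraction exceeds its limit."""
    if not rows:
        return {}
    violations = {}
    for column, limit in limits.items():
        nulls = sum(1 for row in rows if row.get(column) in (None, ""))
        fraction = nulls / len(rows)
        if fraction > limit:
            violations[column] = fraction
    return violations


batch = [
    {"customer_id": "c1", "amount": 12.0, "country": "US"},
    {"customer_id": "c2", "amount": None, "country": "DE"},
    {"customer_id": "c3", "amount": 7.5, "country": ""},
    {"customer_id": "c4", "amount": 3.2, "country": "FR"},
]
print(check_null_thresholds(batch, MAX_NULL_FRACTION))  # {'amount': 0.25, 'country': 0.25}
```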


Phase 2: Building the Intelligent Flow

This is where you integrate AI/ML capabilities into the traditional pipeline stages.

- Ingestion and Real-Time Validation: Set up connectors to pull data from your defined sources.

- AI Enhancement: Integrate ML models here to perform real-time anomaly detection. Instead of relying on static, hand-written rules, the model learns the “normal” pattern of incoming data and flags deviations like unexpected volume spikes or sudden shifts in value distribution (data drift).

- Smart Transformation and Cleansing: Transform raw data into a structured format, clean inconsistencies, and handle missing values.

- AI Enhancement: Use ML for intelligent imputation to fill in missing values using historical patterns, providing a smart guess rather than a simple zero or mean. Use GenAI to auto-suggest and even generate complex transformation scripts based on a natural language description of the required outcome.

- Feature Engineering and Feature Store: This is often the most important step for ML model performance. Features are the input variables your model uses to make a prediction.

- AI Enhancement: Automate the calculation and update of complex features (e.g., a user’s purchase velocity over the last 30 minutes). Use a Feature Store to ensure that the features used for training are exactly the same as the features used for real-time inference (prediction), which prevents the critical issue of training-serving skew.

- Embedding and Vectorization (for GenAI/RAG): If your pipeline supports Large Language Models (LLMs) or RAG, this step is essential.

- AI Enhancement: Pass cleaned text data through an embedding model (e.g., from OpenAI or a cloud provider) to convert it into dense numerical vectors. Store these vectors in a Vector Database (e.g., Pinecone, Weaviate), enabling semantic search for your AI applications.
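To make the purchase-velocity example concrete, the sketch below maintains a trailing 30-minute purchase count per user in a streaming fashion. It assumes each user’s events arrive in timestamp order; streaming engines such as Apache Flink or Spark Structured Streaming handle late or out-of-order events with watermarks. The class name is illustrative and not part of any feature store API.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class PurchaseVelocity:
    """Streaming feature: number of purchases per user in a trailing time window."""

    def __init__(self, window: timedelta = timedelta(minutes=30)):
        self.window = window
        self._events: dict[str, deque] = defaultdict(deque)

    def update(self, user_id: str, ts: datetime) -> int:
        """Record a purchase at ts and return the user's count within the window ending at ts."""
        events = self._events[user_id]
        events.append(ts)
        # Evict purchases older than the window (assumes in-order timestamps per user).
        while events and ts - events[0] > self.window:
            events.popleft()
        return len(events)


feature = PurchaseVelocity()
t0 = datetime(2024, 5, 1, 12, 0)
print(feature.update("u42", t0))                          # 1
print(feature.update("u42", t0 + timedelta(minutes=10)))  # 2
print(feature.update("u42", t0 + timedelta(minutes=25)))  # 3
print(feature.update("u42", t0 + timedelta(minutes=45)))  # 2 (12:00 and 12:10 fell out of the window)
```

Using the same feature logic both when backfilling training data and when serving live requests is what a feature store helps enforce, and it is how training-serving skew is avoided.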

Phase 3: Orchestration and Monitoring

An AI pipeline needs smart management to run reliably at scale.

- Automated Orchestration and Deployment: Use an orchestration tool (like Apache Airflow, Prefect, or cloud-native services like Google Cloud Vertex AI Pipelines or Amazon SageMaker Pipelines) to manage dependencies, scheduling, and scaling.

- AI Enhancement: Some managed platforms use ML-driven autoscaling to provision compute ahead of anticipated data loads, and orchestrators can be configured for self-healing behavior, automatically retrying or rerouting failed tasks.

- End-to-End Monitoring and Feedback Loop: Continuous monitoring is essential for both the pipeline and the AI model.

- AI Enhancement: Monitor for Model Drift (when a model’s prediction accuracy degrades because the real-world data distribution has changed). Set up alerts to automatically trigger a retraining job using the latest prepared data, closing the loop and keeping your AI models fresh and accurate.
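Accuracy often cannot be measured right away because ground-truth labels arrive late, so a common early-warning signal is a shift in the input distribution. The sketch below computes the Population Stability Index (PSI), the sum over bins of (actual share − expected share) × ln(actual share / expected share), between training-time and live values of one feature, and decides whether to trigger retraining. The bin edges and the 0.25 cutoff (a widely cited rule of thumb) are assumptions to tune for your data.

```python
import math
from bisect import bisect_right


def bin_fractions(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin defined by sorted interior edges (len(edges) + 1 bins)."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline: list[float], current: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between the training-time and live distributions."""
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(current, edges)
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def should_retrain(baseline: list[float], current: list[float], edges: list[float],
                   threshold: float = 0.25) -> bool:
    # 0.25 is a commonly used rule-of-thumb cutoff for a significant shift, not a universal constant.
    return psi(baseline, current, edges) > threshold


edges = [10.0, 20.0, 30.0]
baseline = [5.0, 15.0, 25.0, 35.0] * 25  # hypothetical training-time feature values
shifted = [25.0, 35.0] * 50              # hypothetical live values, all in the upper bins
print(f"PSI = {psi(baseline, shifted, edges):.2f}, retrain = {should_retrain(baseline, shifted, edges)}")
```

An orchestrator task could run this check on a schedule and launch the retraining job when it returns `True`.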

When these steps come together, you get a pipeline that doesn’t just move data; it interprets it, enriches it, and prepares it for advanced analytics and AI applications from the moment it arrives.

| Tool Category | Example Tools | AI Integration Focus |
|---|---|---|
| Orchestration | Apache Airflow, Prefect, Dagster | Workflow optimization, self-healing, predictive scaling |
| Transformation | dbt, Databricks DLT, AWS Glue | Automated transformation generation, quality checks, schema drift handling |
| Feature Store | Feast, SageMaker Feature Store | Training/serving consistency, low-latency feature retrieval |
| Vector DB | Pinecone, Weaviate, Qdrant | Storage and retrieval of high-dimensional data embeddings for RAG |

Partner with Bitcot to Build Your AI-Powered Data Pipeline

While the vision of an intelligent data pipeline is exciting, the implementation can be complex, involving specialized expertise across data engineering, MLOps, and cloud architecture. This is where partnering with a skilled, experienced provider like Bitcot can accelerate your journey and help you deliver a robust, production-ready system.

Bitcot specializes in transforming complex data requirements into scalable, adaptive AI solutions that deliver tangible business value.

Why Choose Bitcot for Your AI Pipeline?

- Holistic Strategy & Architecture: We don’t just write code; we start with your business outcome. Bitcot helps you define the optimal cloud-native architecture (AWS, Azure, or GCP) and select the right technologies (Lakehouse, Feature Store, Vector DB) tailored specifically for your target AI application (e.g., predictive analytics, GenAI RAG).

- Expertise in MLOps and Feature Engineering: Building a pipeline to train a model is one thing; building one that supports real-time inference at scale is another. Our MLOps specialists ensure seamless integration of your pipeline with your ML models, utilizing tools like Feature Stores to eliminate training-serving skew and maintain model accuracy in production.

- Focus on Data Quality and Governance: AI models are only as good as the data they consume. Bitcot embeds intelligent data quality checks, including AI-driven anomaly detection and automated schema validation, directly into the pipeline. We establish robust governance frameworks to ensure compliance and data reliability from ingestion to deployment.

- Accelerated Time-to-Value: Leveraging our deep industry experience and pre-built templates for common AI use cases (e.g., customer churn, forecasting, document intelligence), we significantly reduce development time. This means you can move from proof-of-concept to a live, value-generating AI system faster.

Stop treating your data pipeline as a simple utility. Make it your strategic advantage.

Ready to build a data pipeline that not only moves data but also intelligently prepares it for your most critical AI initiatives?

Final Thoughts

Data isn’t getting any simpler.

New tools appear every quarter, teams add more sources than they retire, and everyone expects cleaner, faster, smarter insights than ever before. It’s easy to feel like you’re constantly patching systems instead of actually using your data to move the business forward.

AI-powered data pipelines offer a practical way out of that cycle. They let you work with the data you actually have, not the perfectly formatted data you wish you had. They adapt, they interpret, and they help you build a foundation that can keep pace with whatever your teams launch next.

If you’re just starting this journey, you don’t need to overhaul everything at once. Even one or two intelligent components, such as automated classification, unstructured-to-structured extraction, or embedding generation, can make your entire ecosystem feel lighter and more manageable. Step by step, you build a pipeline that thinks alongside you.

And if you’re looking for support, Bitcot can help you put these ideas into action. Whether you need guidance, implementation, or full modern data stack solutions, our team can design and build AI-driven pipelines tailored to your business.

Ready to make your data actually work for you? Let’s build it together.

Get in touch with our team.