Imagine deploying software 60% faster and cutting your infrastructure costs in half while your competitors still struggle with outdated systems.

It may sound ambitious, but this is the power of cloud-native applications. They are transforming how modern businesses build, scale, and innovate every day.

But here’s a question worth asking yourself:

If speed, scalability, and reliability define success today, why are so many teams still stuck in traditional development cycles?

Most businesses already understand the promise of cloud-native systems. Greater flexibility, automation, and cost efficiency are well-known advantages.

Yet many are still trapped by legacy infrastructure, slow deployments, or uncertainty about where to begin.

The problem isn’t awareness. It’s action. You already know these principles, but where are you now? Are your applications ready to scale as fast as your customers expect?

Whether you are a technical architect focused on performance, a non-technical founder looking to reduce costs, or a business owner aiming to innovate faster, the message is the same.

Waiting too long to modernize means watching competitors move ahead with smarter and more efficient systems. The longer you delay, the more difficult and expensive it becomes to catch up.

At Bitcot, we help businesses take that leap with confidence.

We design and build cloud-native solutions that deliver real, measurable results. Faster deployment, lower operational costs, and greater resilience are just the beginning.

Whether you are modernizing legacy systems or building something new, Bitcot helps you bridge the gap between where you are and where you need to be.

Now is the time to act. The future is not something to wait for. It is something to build today.

What Are Cloud Native Applications? Definition & Key Characteristics

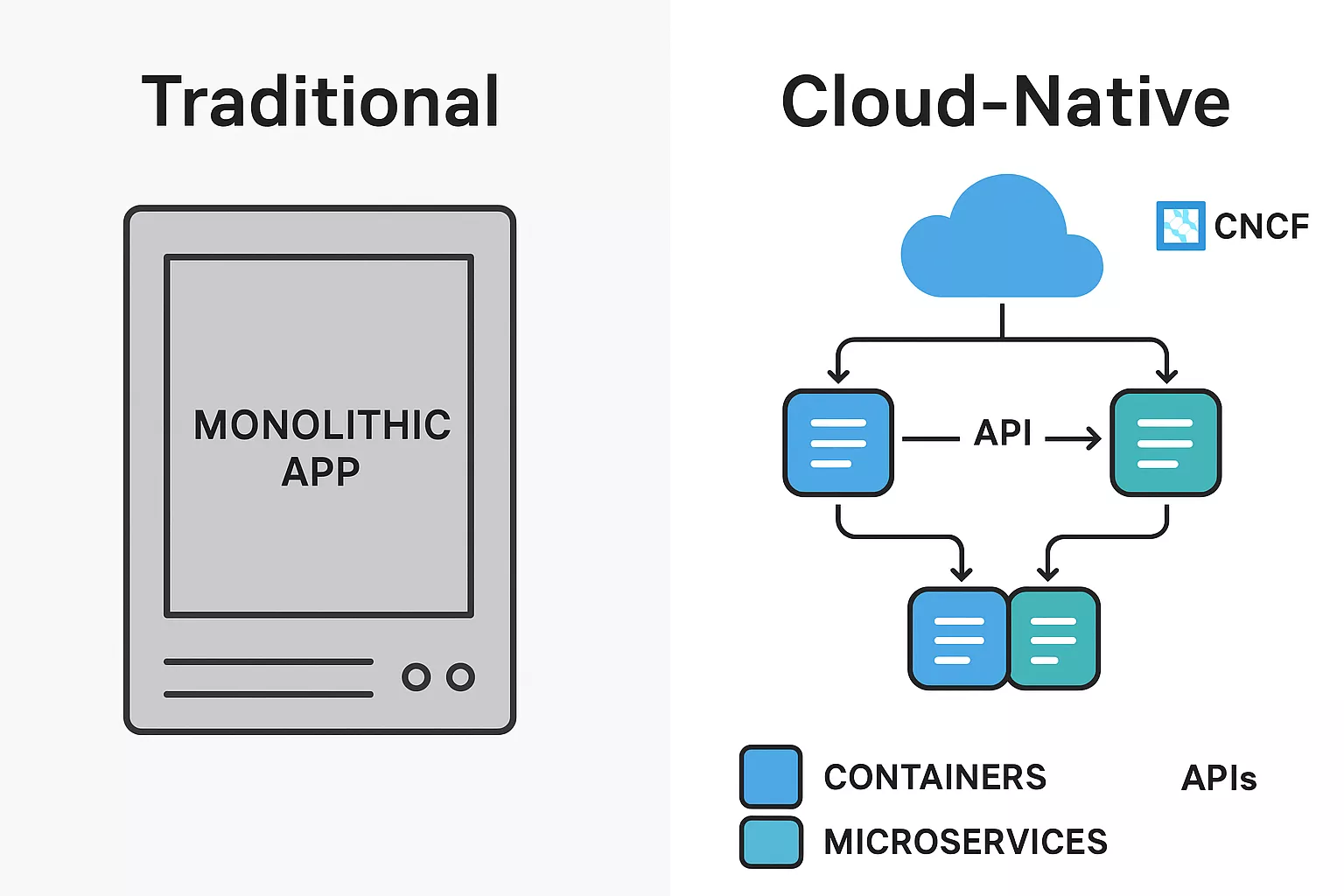

Cloud-native applications are software programs designed specifically to run in cloud computing environments. Unlike traditional applications migrated to the cloud, these are built from the ground up using cloud architecture principles.

The Cloud Native Computing Foundation (CNCF) cites containers, service meshes, microservices, immutable infrastructure, and declarative APIs as exemplifying this approach, which produces loosely coupled systems that are resilient, manageable, and observable.

Key characteristics include:

- Microservices architecture: Breaking down applications into smaller, independent services

- Containerization: Packaging applications with their dependencies for consistent deployment

- Dynamic orchestration: Automated management of application lifecycles

- DevOps integration: Continuous delivery and integration pipelines

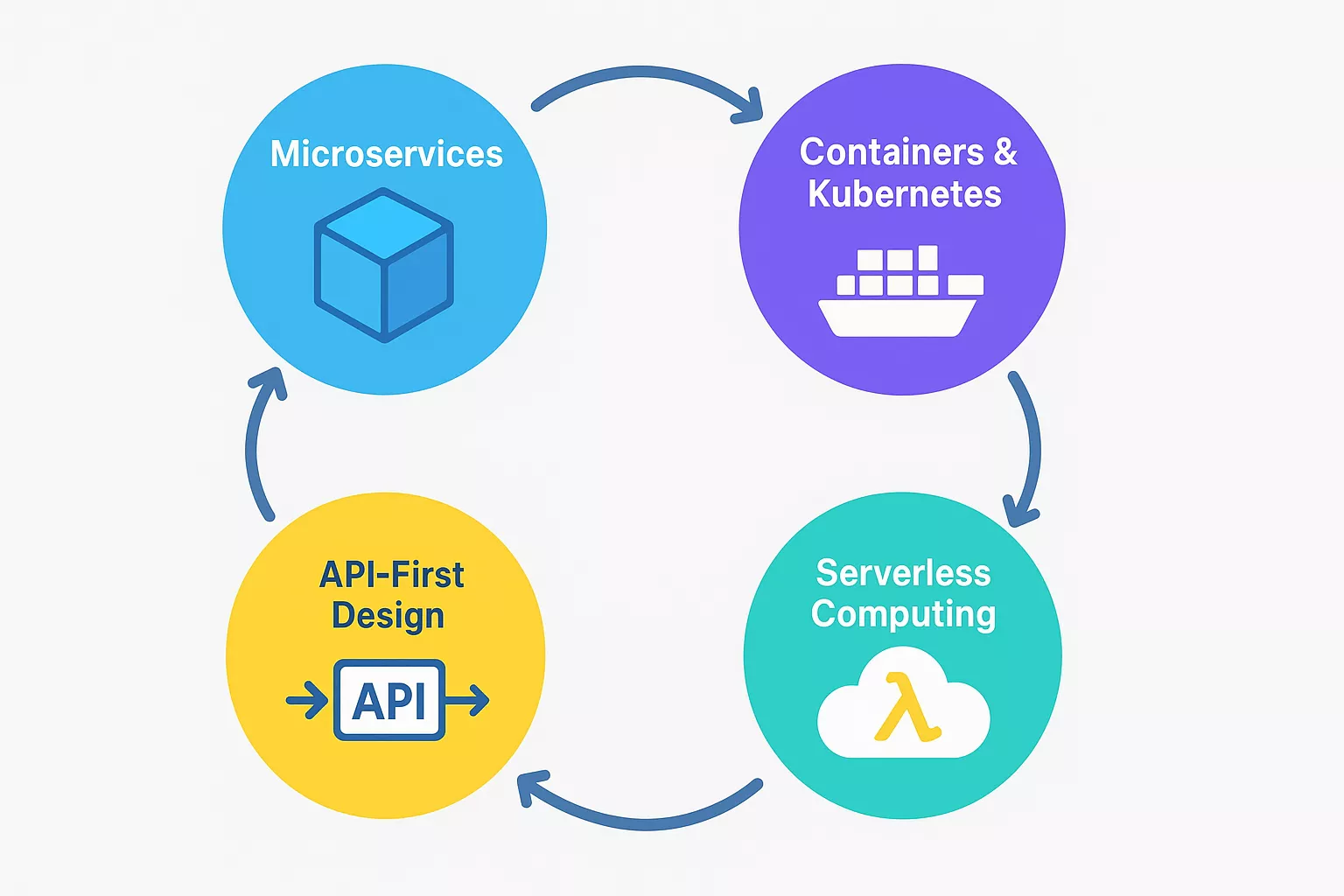

Core Components of Cloud Native Architecture Explained

Building these applications requires understanding four foundational elements that work together to create scalable, resilient systems.

Microservices

Microservices break monolithic applications into small, autonomous services. Each service handles a specific business function and communicates through APIs. This architecture enables teams to develop, deploy, and scale services independently.
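To make "one business function behind an API" concrete, here is a minimal sketch of a single stock-lookup service built only on Python's standard library. The service, its `/stock/<sku>` route, and its data are hypothetical; a production service would usually use a web framework and its own database.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data owned exclusively by this service; other services
# must go through its API instead of reading this store directly.
STOCK = {"sku-123": 7, "sku-456": 0}


def handle_stock(path):
    """Business logic for GET /stock/<sku>: returns (status, JSON-able body)."""
    parts = path.strip("/").split("/")
    if len(parts) == 2 and parts[0] == "stock" and parts[1] in STOCK:
        return 200, {"sku": parts[1], "quantity": STOCK[parts[1]]}
    return 404, {"error": "not found"}


class StockHandler(BaseHTTPRequestHandler):
    """Thin HTTP layer: the API is the only way in to this service's data."""

    def do_GET(self):
        status, body = handle_stock(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8080):
    """Run the service until interrupted (blocking)."""
    HTTPServer(("0.0.0.0", port), StockHandler).serve_forever()
```

Calling `serve()` starts the service; a request to `/stock/sku-123` returns `{"sku": "sku-123", "quantity": 7}`. Keeping the logic in `handle_stock` separate from the HTTP handler makes it easy to test without a network.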

Netflix pioneered this approach by decomposing their monolithic DVD rental platform into hundreds of microservices. This transformation allowed them to scale to over 200 million subscribers while maintaining 99.99% uptime.

Containers and Kubernetes

Containers package application code with dependencies, creating portable units that run consistently across environments. Docker dominates container technology, while Kubernetes orchestrates containerized applications at scale.

Kubernetes automates deployment, scaling, and management tasks. It handles load balancing, self-healing, and rolling updates without manual intervention.
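Self-healing rests on a control loop that repeatedly compares desired state with actual state and corrects the difference. The toy model below illustrates that idea in plain Python; `Cluster` and `reconcile` are hypothetical names, and this is a simplification, not how Kubernetes controllers or its API are implemented.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy cluster state: pod name -> healthy flag (not the Kubernetes API)."""
    pods: dict = field(default_factory=dict)
    ids: itertools.count = field(default_factory=itertools.count)


def reconcile(cluster, app, desired_replicas):
    """One pass of a control loop: converge actual pods toward the desired count."""
    # Self-healing: drop pods that have been marked unhealthy.
    for name in [n for n, healthy in cluster.pods.items() if not healthy]:
        del cluster.pods[name]
    running = list(cluster.pods)
    # Too few replicas: start replacements.
    for _ in range(desired_replicas - len(running)):
        cluster.pods[f"{app}-{next(cluster.ids)}"] = True
    # Too many replicas: stop the surplus.
    for name in running[desired_replicas:]:
        del cluster.pods[name]
    return sorted(cluster.pods)


cluster = Cluster()
print(reconcile(cluster, "web", 3))  # ['web-0', 'web-1', 'web-2']
cluster.pods["web-1"] = False        # simulate a crashed container
print(reconcile(cluster, "web", 3))  # ['web-0', 'web-2', 'web-3']
```

Because the loop only ever compares "what should exist" with "what does exist", the same code path handles crashes, scale-ups, and scale-downs.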

Serverless Computing

Serverless architecture lets developers build applications without managing infrastructure. Cloud providers automatically allocate resources based on demand, charging only for actual usage.

AWS Lambda, Azure Functions, and Google Cloud Functions represent popular serverless platforms. These services execute code in response to events, scaling from zero to thousands of concurrent executions automatically.
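For illustration, a Python function on AWS Lambda is just a handler that receives an event and a context object; the platform decides when and how many copies to run. The sketch below assumes an API Gateway proxy-style event carrying a JSON string in `body`; the `name` field and the greeting are hypothetical.

```python
import json


def lambda_handler(event, context):
    """Invoked once per event; provisioning and scaling are the platform's job.

    Assumes an API Gateway proxy-style event whose "body" is a JSON string.
    """
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")  # hypothetical request field
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Locally the handler can be exercised with `lambda_handler({"body": "{\"name\": \"Ada\"}"}, None)`; there is no server loop or port to manage, which is the point of the serverless model.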

API-First Design

These applications prioritize APIs as the primary interface between services. RESTful APIs and GraphQL enable seamless communication while maintaining service independence.

This approach supports integration with third-party services and creates opportunities for building ecosystems around your application.

Top Benefits of Cloud Native Application Development

This architecture delivers measurable advantages that directly impact business performance, from reduced costs to faster innovation cycles.

Scalability and Flexibility

These applications scale horizontally by adding more instances rather than upgrading hardware. This elasticity matches resource consumption with actual demand.

Spotify uses this architecture to handle 489 million users across 180+ markets. Their system automatically scales during peak hours and scales down during off-peak periods, optimizing costs.

Faster Time to Market

Continuous integration and continuous deployment (CI/CD) pipelines accelerate release cycles. Teams can push updates multiple times daily instead of monthly or quarterly releases.

High-performing organizations deploy code 200 times more frequently than low performers, according to the 2016 State of DevOps Report.

Cost Optimization

Pay-as-you-go pricing models eliminate upfront infrastructure investments. Auto-scaling prevents over-provisioning while ensuring adequate resources during traffic spikes.

Companies typically reduce operational costs by 30-50% within the first year of adoption through better resource utilization.

Enhanced Reliability

Distributed architecture prevents single points of failure. If one microservice crashes, others continue functioning normally. Kubernetes automatically restarts failed containers and redistributes workloads.

This resilience translates to higher availability. Applications routinely achieve 99.95% uptime or better.

Improved Developer Productivity

Microservices enable parallel development. Multiple teams work on different services simultaneously without conflicts. Containers make “it works on my machine” problems rare.

Developers focus on writing code rather than managing infrastructure, increasing productivity by 35-40% on average.

Cloud Native Development Methods and Best Practices for Success

Implementing these proven methodologies and industry standards ensures your applications are built on solid foundations.

Adopt the Twelve-Factor App Methodology

The twelve-factor app methodology provides principles for building these applications:

- Codebase: One codebase tracked in version control, many deploys

- Dependencies: Explicitly declare and isolate dependencies

- Config: Store configuration in environment variables

- Backing services: Treat backing services as attached resources

- Build, release, run: Strictly separate build and run stages

- Processes: Execute the app as stateless processes

- Port binding: Export services via port binding

- Concurrency: Scale out via the process model

- Disposability: Maximize robustness with fast startup and graceful shutdown

- Dev/prod parity: Keep development, staging, and production similar

- Logs: Treat logs as event streams

- Admin processes: Run admin tasks as one-off processes
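As a small illustration of the config, backing-services, and port-binding factors, the sketch below reads settings from environment variables so the same build can run unchanged in every environment. The variable names `DATABASE_URL`, `PORT`, and `DEBUG` are assumptions chosen for the example, not names the methodology prescribes.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str  # backing service attached via config (factor IV)
    port: int          # port the app binds to itself (factor VII)
    debug: bool


def load_settings(env=None):
    """Build settings from environment variables (factor III) instead of
    hard-coding them, so one build artifact works in dev, staging, and prod."""
    env = os.environ if env is None else env
    return Settings(
        database_url=env["DATABASE_URL"],  # required: fail fast at startup if missing
        port=int(env.get("PORT", "8080")),
        debug=env.get("DEBUG", "false").lower() == "true",
    )
```

Failing fast on a missing required variable surfaces misconfiguration at deploy time rather than on the first request.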

Implement Infrastructure as Code (IaC)

Infrastructure as Code defines infrastructure through code files rather than manual configuration. Tools like Terraform, AWS CloudFormation, and Azure Resource Manager automate infrastructure provisioning.

IaC benefits include version control, reproducibility, and faster disaster recovery. Teams can recreate entire environments in minutes using stored configurations.
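Declarative IaC tools compare the desired state described in code with what actually exists and compute a change set from the difference (Terraform presents this as a plan). The function below is a deliberately simplified sketch of that diffing idea with hypothetical resource names; it is not how Terraform or CloudFormation work internally.

```python
def plan(desired, current):
    """Diff desired vs current resources (name -> attributes) into actions."""
    actions = [("create", n) for n in sorted(desired.keys() - current.keys())]
    actions += [("update", n) for n in sorted(desired.keys() & current.keys())
                if desired[n] != current[n]]
    actions += [("delete", n) for n in sorted(current.keys() - desired.keys())]
    return actions


desired = {"web-lb": {"type": "application"}, "db": {"size": "large"}}
current = {"db": {"size": "small"}, "old-cache": {"nodes": 2}}
print(plan(desired, current))
# [('create', 'web-lb'), ('update', 'db'), ('delete', 'old-cache')]
```

Because the desired state lives in version control, recreating an environment amounts to running the same diff against an empty `current`.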

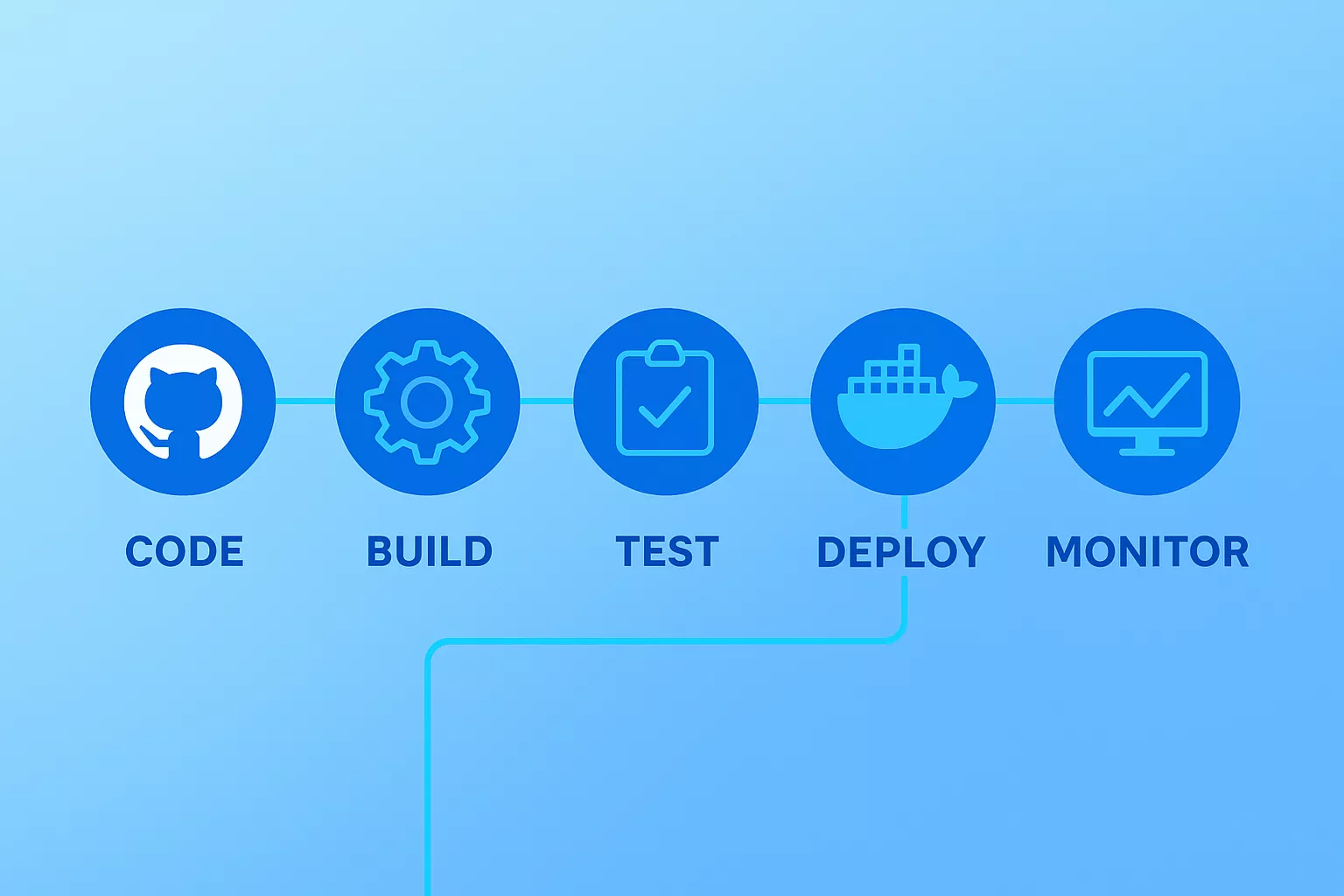

Establish CI/CD Pipelines

Automated pipelines test, build, and deploy code changes continuously. Jenkins, GitLab CI, CircleCI, and GitHub Actions integrate with cloud platforms to streamline releases.

Effective CI/CD pipelines include:

- Automated testing at multiple levels (unit, integration, end-to-end)

- Container image building and scanning for vulnerabilities

- Staged deployments with rollback capabilities

- Performance and security testing before production release

For teams looking to implement robust solutions, selecting the right tools and establishing proper automation workflows are critical to continuous delivery success.

Use Service Mesh for Microservices Communication

Service meshes like Istio, Linkerd, and Consul manage service-to-service communication. They provide traffic management, security, and observability without changing application code.

Key features include automatic load balancing, failure recovery, encryption, and detailed telemetry data for monitoring service health.

Implement Observability

Applications generate massive amounts of data across distributed components. Comprehensive observability requires three pillars:

- Logging: Collecting and analyzing application logs using ELK Stack or Splunk

- Monitoring: Tracking metrics with Prometheus and Grafana

- Tracing: Following requests through distributed systems using Jaeger or Zipkin

These tools identify performance bottlenecks, debug issues, and optimize resource allocation.
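Logging pipelines such as ELK or Splunk work best when each line is structured and carries a correlation ID that also appears in traces. The sketch below uses Python's standard `logging` module to emit one JSON object per line to stdout; the `checkout` service name and field names are assumptions, and in practice a tracing library such as OpenTelemetry would usually generate and propagate the trace ID.

```python
import json
import logging
import sys
import uuid


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line so log pipelines can index fields."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "service": getattr(record, "service", None),
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        })


def make_logger(stream=sys.stdout):
    """Write logs to a stream (stdout by default) and let the platform ship them."""
    logger = logging.getLogger("checkout")
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


log = make_logger()
trace_id = uuid.uuid4().hex  # normally taken from an incoming request header
log.info("order placed", extra={"service": "checkout", "trace_id": trace_id})
```

Searching the log store for a single `trace_id` then returns every line that one request produced, across all services that logged it.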

Design for Failure

Assume components will fail and build resilience into your architecture. Implement circuit breakers, retry logic, and timeouts to prevent cascading failures.
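Of these patterns, the circuit breaker is the least self-explanatory: after repeated failures it stops calling a struggling dependency for a cooldown period, so callers fail fast instead of piling up. A minimal single-threaded sketch follows; the thresholds are arbitrary assumptions, and production services usually rely on a resilience library or a service mesh for this.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling a dependency that is considered unhealthy."""


class CircuitBreaker:
    """Closed -> open after N consecutive failures; after a cooldown, one
    trial call is let through (half-open) and its outcome decides the state."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("failing fast: dependency marked unhealthy")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```

Injecting `clock` keeps the breaker testable without real waiting; retries and timeouts would wrap the `fn` being protected.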

Netflix’s Chaos Engineering approach deliberately introduces failures to test system resilience. Their Chaos Monkey randomly terminates instances to ensure applications handle disruptions gracefully.

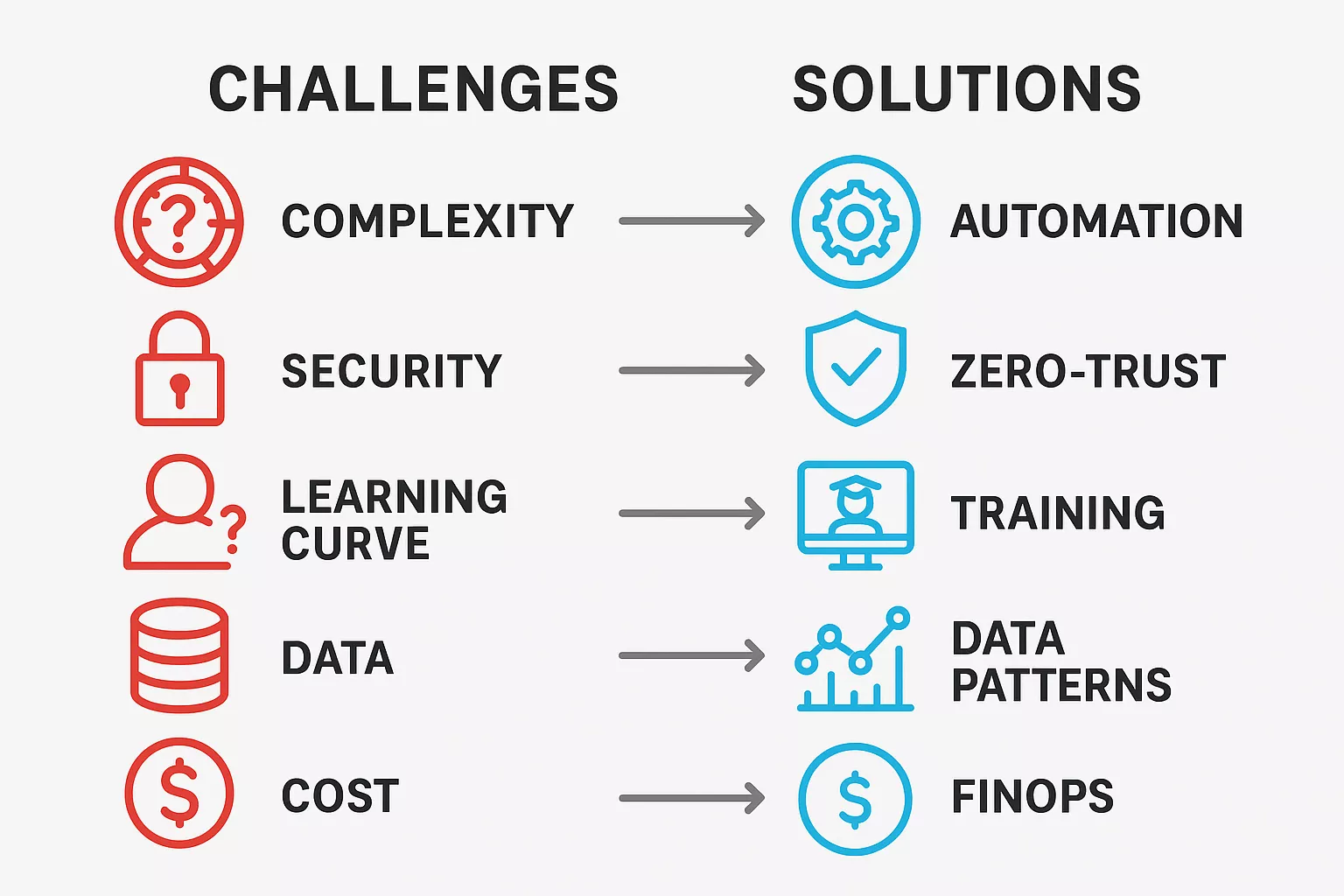

Common Challenges in Cloud Native Development & Solutions

While this approach offers significant benefits, organizations face several obstacles during adoption. Here’s how to overcome them.

Increased Complexity

Managing dozens or hundreds of microservices creates operational complexity. Each service requires monitoring, logging, and maintenance.

Solution: Adopt comprehensive observability tools and standardize service deployment patterns. Use Kubernetes operators to automate complex application management tasks.

Security Concerns

Distributed architectures expand attack surfaces. Each microservice, API, and container represents a potential vulnerability.

Solution: Implement zero-trust security models. Use mutual TLS for service communication, regularly scan containers for vulnerabilities, and apply least-privilege access controls. Tools like HashiCorp Vault manage secrets securely across services.

Learning Curve

These technologies require new skills. Teams familiar with monolithic architectures face steep learning curves with Kubernetes, containers, and microservices patterns.

Solution: Invest in training and start small. Pilot these approaches on non-critical applications before migrating core systems. Partnering with experienced development companies like Bitcot accelerates the transition by providing expertise and guidance throughout the journey. Organizations can also leverage DevOps consulting services to bridge the knowledge gap and establish best practices from the start.

Data Management

Managing data consistency across distributed services challenges traditional database approaches. Transactions spanning multiple microservices require careful coordination.

Solution: Implement the Saga pattern for distributed transactions. Use event sourcing and CQRS (Command Query Responsibility Segregation) patterns when appropriate. Choose databases that match each microservice’s specific needs.
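In an orchestrated saga, each local transaction is paired with a compensating action, and a failure triggers the compensations for the steps that already succeeded, in reverse order. The sketch below shows only that control flow with hypothetical step names; a real implementation would persist saga state and make compensations idempotent so they can be retried safely.

```python
class SagaFailed(Exception):
    """Raised after a saga step failed and earlier steps were compensated."""


def run_saga(steps):
    """Run (action, compensation) pairs in order; on failure, undo completed
    steps in reverse order instead of relying on a distributed transaction."""
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception as exc:
            for undo in reversed(completed):
                undo()
            raise SagaFailed(f"saga rolled back after: {exc}") from exc
        completed.append(compensate)


log = []

def book_courier():
    raise RuntimeError("no courier available")  # simulated failure in a third service

steps = [
    (lambda: log.append("reserve stock"), lambda: log.append("release stock")),
    (lambda: log.append("charge card"), lambda: log.append("refund card")),
    (book_courier, lambda: log.append("cancel courier")),
]
try:
    run_saga(steps)
except SagaFailed as err:
    print(err)  # saga rolled back after: no courier available
print(log)  # ['reserve stock', 'charge card', 'refund card', 'release stock']
```

Each service only ever commits its own local transaction; consistency across services is restored by the compensations rather than by a global lock.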

Cost Management

Without proper governance, cloud costs can spiral. Auto-scaling, if misconfigured, may provision excessive resources.

Solution: Implement FinOps practices. Use cloud cost management tools, set resource quotas, and regularly review spending. Tag resources for accurate cost allocation across teams and projects.
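Tagging only pays off if spend is actually rolled up by tag. The sketch below sums hypothetical billing line items per `team` tag and groups untagged spend separately so it can be chased down; the line-item shape is an assumption, not any specific provider's billing export format.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key="team"):
    """Sum cost per value of a cost-allocation tag; untagged spend is grouped
    under 'UNTAGGED' so it can be traced back to an owner."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "UNTAGGED")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing line items.
items = [
    {"cost": 120.0, "tags": {"team": "search"}},
    {"cost": 30.0, "tags": {"team": "search"}},
    {"cost": 75.0, "tags": {"team": "payments"}},
    {"cost": 50.0},
]
print(allocate_costs(items))
# {'search': 150.0, 'payments': 75.0, 'UNTAGGED': 50.0}
```

A growing `UNTAGGED` bucket is a useful early signal that tagging policies are not being enforced.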

Real-World Cloud Native Application Examples from Industry Leaders

Leading companies across industries have successfully transformed their operations through these architectures. Here’s what they achieved.

Uber

Uber rebuilt their monolithic architecture into over 2,000 microservices. This transformation enabled them to scale globally while maintaining regional compliance and customization.

Their infrastructure processes 40 million rides daily, handling billions of transactions across their distributed system. Geographic load balancing ensures low latency for users worldwide.

Airbnb

Airbnb’s platform handles millions of booking requests during peak seasons. Their Kubernetes-based infrastructure automatically scales to meet demand spikes during holidays and events.

They use machine learning models deployed as containerized microservices to optimize search results, pricing recommendations, and fraud detection.

Capital One

Capital One migrated to this architecture to enhance security and accelerate innovation. They now deploy code changes thousands of times daily across their banking applications.

Their containerized infrastructure improved disaster recovery capabilities while reducing data center costs by 40%.

Spotify

Spotify processes 70,000 requests per second using these technologies. Their microservices architecture enables 200+ autonomous teams to develop features independently.

Container orchestration handles traffic distribution across global data centers, ensuring seamless playback for users regardless of location.

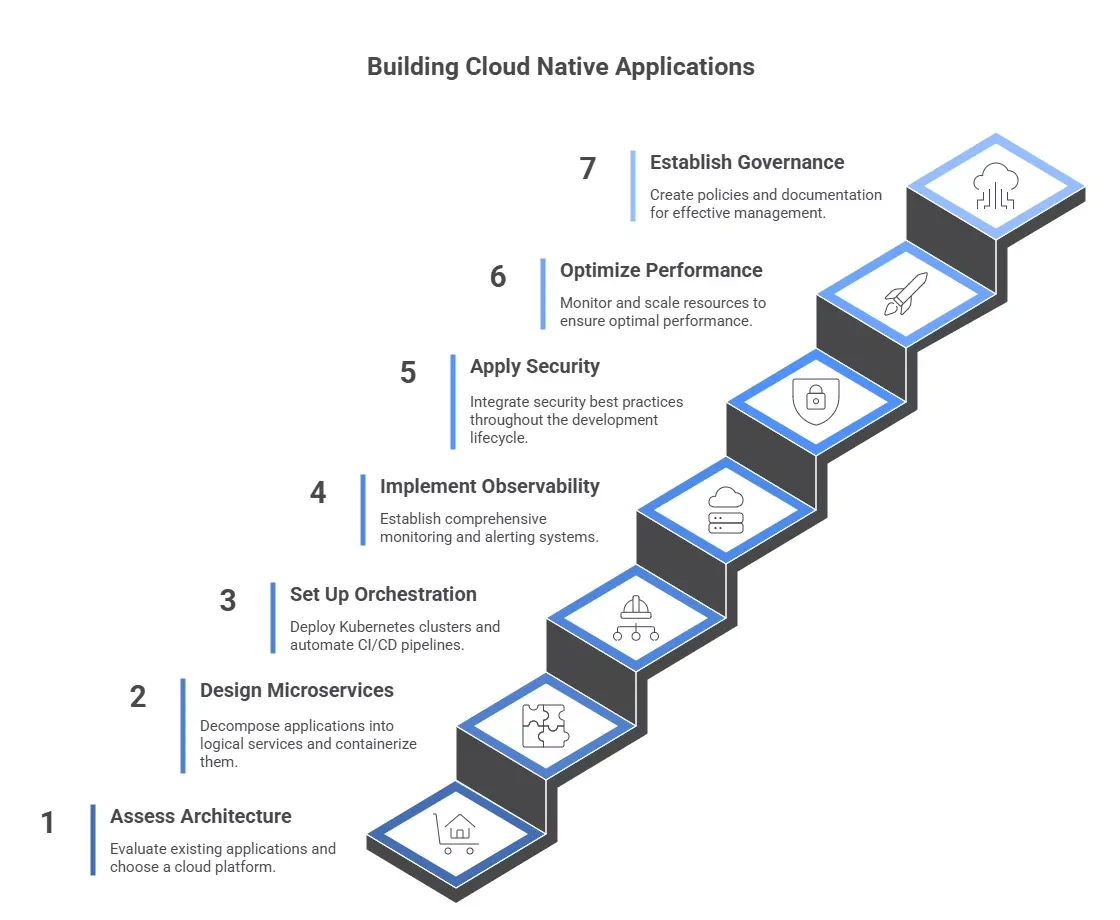

How to Build Cloud Native Applications in 7 Steps

Follow this comprehensive roadmap to transform your application architecture from planning through production deployment.

Step 1: Assess Architecture and Choose Cloud Platform

Evaluate existing applications to identify candidates for transformation. Not every application benefits from migration; focus on those requiring scalability, frequent updates, or improved reliability.

Document dependencies, data flows, and integration points. This analysis informs your migration strategy and helps prioritize efforts. Organizations with legacy systems should consider legacy application modernization strategies that align with these principles. Understanding what cloud migration involves helps teams prepare for the transformation journey ahead.

Select infrastructure providers based on requirements, budget, and existing expertise. Major options include:

- Amazon Web Services (AWS): Largest market share with comprehensive services

- Microsoft Azure: Strong integration with enterprise Microsoft environments

- Google Cloud Platform (GCP): Advanced data analytics and machine learning capabilities

- Multi-cloud: Combining multiple providers for redundancy or specialized features

Consider hybrid cloud approaches if regulatory requirements mandate on-premises components. Selecting the right cloud migration tools streamlines the transition process and reduces migration risks.

Step 2: Design Microservices Architecture and Containerize

Decompose monolithic applications into logical services aligned with business capabilities. Each microservice should:

- Have a single, well-defined responsibility

- Own its data and business logic

- Communicate via lightweight protocols (REST, gRPC, message queues)

- Deploy and scale independently

Use domain-driven design principles to identify service boundaries. Avoid creating too many microservices initially; start with coarser boundaries and refine based on operational experience. Understanding microservices architecture patterns helps teams make informed decisions about service decomposition.

Package each microservice into containers using Docker or similar technologies. Create Dockerfiles that specify application dependencies, environment configurations, and runtime requirements.

Optimize container images by:

- Using minimal base images (Alpine Linux, distroless containers)

- Implementing multi-stage builds to reduce image size

- Scanning images for vulnerabilities before deployment

- Versioning images properly for rollback capabilities

Step 3: Set Up Kubernetes Orchestration and CI/CD Pipelines

Deploy Kubernetes clusters to manage containerized applications. Configure:

- Namespaces: Logical separation for different environments or teams

- Deployments: Declarative application state management

- Services: Network endpoints for accessing microservices

- Ingress controllers: External traffic routing and load balancing

- ConfigMaps and Secrets: Configuration and sensitive data management

Consider managed Kubernetes services like Amazon EKS, Azure AKS, or Google GKE to reduce operational overhead. Teams new to Kubernetes can benefit from exploring comprehensive DevOps tools that integrate seamlessly with container orchestration platforms.

Automate build, test, and deployment processes. A typical pipeline includes:

- Code commit triggers pipeline execution

- Automated tests validate functionality

- Container images build and push to registries

- Security scans check for vulnerabilities

- Deployment to staging environment

- Automated integration and performance tests

- Approval gate for production release

- Rolling deployment to production

- Post-deployment validation
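The ordering above, and in particular the rule that a failure after the production deploy should trigger a rollback, can be sketched as a tiny stage runner. This is a hypothetical illustration, not how Jenkins, GitLab CI, or GitHub Actions model pipelines; the lambdas stand in for real jobs.

```python
def run_pipeline(stages, rollback):
    """Run (name, check) stages in order, stopping at the first failure.

    Each check returns True on success. Once the production deploy stage
    has started, any failure triggers rollback() to restore the previous
    image version.
    """
    history = []
    deployed = False
    for name, check in stages:
        if name == "deploy-production":
            deployed = True  # production may change from this point on
        ok = check()
        history.append((name, ok))
        if not ok:
            if deployed:
                rollback()
                history.append(("rollback", True))
            return False, history
    return True, history


# Hypothetical stage names mirroring the list above.
stages = [
    ("unit-tests", lambda: True),
    ("build-and-push-image", lambda: True),
    ("security-scan", lambda: True),
    ("deploy-staging", lambda: True),
    ("integration-and-performance-tests", lambda: True),
    ("approval-gate", lambda: True),
    ("deploy-production", lambda: True),
    ("post-deploy-validation", lambda: False),  # simulate a failed smoke test
]
ok, history = run_pipeline(stages, rollback=lambda: print("rolling back"))
print(ok, history[-2:])
# rolling back
# False [('post-deploy-validation', False), ('rollback', True)]
```

Failures before the production stage simply stop the run, since nothing user-facing has changed yet.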

GitOps approaches using tools like ArgoCD or Flux enable declarative deployments where Git repositories represent the desired system state. Organizations looking to optimize their deployment pipelines can explore the DevOps infinity loop methodology for continuous improvement.

Step 4: Implement Observability and Monitoring

Establish comprehensive observability from day one. Deploy:

- Prometheus: Metric collection and alerting

- Grafana: Visualization and dashboards

- ELK Stack or Loki: Centralized log aggregation

- Jaeger or Zipkin: Distributed tracing

Define SLOs (Service Level Objectives) and SLIs (Service Level Indicators) for critical services. Configure alerts for threshold violations and anomalies.
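SLOs become actionable once they are translated into an error budget. For example, a 99.9% availability SLO over 30 days allows 0.001 × 43,200 minutes, or about 43.2 minutes of downtime. The helper names below are hypothetical; the arithmetic is the standard SLO/SLI relationship.

```python
def error_budget_minutes(slo, window_days=30):
    """Downtime allowed in the window by an availability SLO such as 0.999."""
    return (1 - slo) * window_days * 24 * 60


def availability_sli(good_events, total_events):
    """SLI: fraction of events (e.g. requests) that were served successfully."""
    return good_events / total_events if total_events else 1.0


def budget_remaining(sli, slo):
    """Fraction of the error budget still unspent (negative means SLO breached)."""
    return (sli - slo) / (1 - slo)


print(round(error_budget_minutes(0.999), 1))   # 43.2
sli = availability_sli(999_500, 1_000_000)     # 0.9995
print(round(budget_remaining(sli, 0.999), 2))  # 0.5
```

Alerting on the rate at which the budget is being spent, rather than on every individual error, tends to produce fewer and more meaningful pages.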

Step 5: Apply Security Best Practices

Security must be integrated throughout the development lifecycle:

- Implement network policies to control pod-to-pod communication

- Use RBAC (Role-Based Access Control) for Kubernetes access

- Encrypt data in transit and at rest

- Scan dependencies and containers for known vulnerabilities

- Implement secrets management using Vault or cloud provider solutions

- Enforce Pod Security Standards through Pod Security Admission (the older PodSecurityPolicy was removed in Kubernetes 1.25) to prevent privilege escalation
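To illustrate least privilege, the sketch below models a deny-by-default check loosely based on Kubernetes RBAC, where rules are purely additive and access is granted only if some rule matches. The `Rule` type and the CI-deployer role are hypothetical simplifications (real Role rules also carry API groups and resource names).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Simplified Role rule: which verbs are granted on which resources."""
    resources: frozenset
    verbs: frozenset


def is_allowed(rules, resource, verb):
    """Deny by default; allow only if some rule grants the verb on the resource.
    Rules are purely additive, and '*' acts as a wildcard."""
    return any(
        (resource in r.resources or "*" in r.resources)
        and (verb in r.verbs or "*" in r.verbs)
        for r in rules
    )


# Hypothetical least-privilege role for a CI deployer: it may roll out
# Deployments but cannot read Secrets or delete anything.
ci_deployer = [Rule(frozenset({"deployments"}), frozenset({"get", "list", "patch", "update"}))]
print(is_allowed(ci_deployer, "deployments", "patch"))  # True
print(is_allowed(ci_deployer, "secrets", "get"))        # False
```

Since nothing is allowed unless explicitly granted, a compromised CI credential in this setup could not read Secrets.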

Regular security audits and penetration testing identify vulnerabilities before attackers exploit them. Implement zero-trust security models with mutual TLS for service communication.

Step 6: Optimize Performance and Scale Resources

Monitor application performance and resource utilization. Implement horizontal pod autoscaling based on CPU, memory, or custom metrics. Use cluster autoscaling to add nodes when demand increases.
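The Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The function below mirrors that formula in simplified form; `min_replicas` and `max_replicas` stand in for an HPA's `minReplicas` and `maxReplicas`, and details such as stabilization windows and missing metrics are omitted.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA calculation: scale in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6 (CPU 90% vs 60% target)
print(desired_replicas(3, current_metric=62, target_metric=60))  # 3 (within tolerance)
print(desired_replicas(5, current_metric=20, target_metric=60))  # 2
```

The tolerance band keeps the autoscaler from flapping when load hovers near the target.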

Optimize costs by:

- Right-sizing containers based on actual usage

- Using spot instances for non-critical workloads

- Setting pod disruption budgets so cluster scale-down and node draining don't evict too many replicas at once

- Scheduling batch jobs during off-peak hours

Test system resilience using chaos engineering approaches. Design for failure by implementing circuit breakers, retry logic, and timeouts to prevent cascading failures.

Step 7: Establish Governance, Compliance, and Documentation

Create policies for resource management, security, and compliance. Use Open Policy Agent (OPA) or similar tools to enforce organizational standards.
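OPA policies are written in its own language, Rego, and are commonly enforced at admission time (for example through OPA Gatekeeper in Kubernetes). To stay in one language here, the sketch below expresses two hypothetical rules as a plain Python check over a Deployment manifest; it illustrates the policy-as-code idea and is not OPA's API.

```python
def check_deployment(manifest):
    """Return policy violations for a Deployment manifest given as a dict.
    The two rules here are hypothetical examples of organizational standards."""
    violations = []
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for c in pod_spec.get("containers", []):
        name = c.get("name", "?")
        image_ref = c.get("image", "").rsplit("/", 1)[-1]  # strip registry/path
        if ":" not in image_ref or image_ref.endswith(":latest"):
            violations.append(f"{name}: image must be pinned to a version tag or digest")
        if "limits" not in c.get("resources", {}):
            violations.append(f"{name}: resource limits are required")
    return violations


bad = {"spec": {"template": {"spec": {"containers": [{"name": "web", "image": "nginx:latest"}]}}}}
print(check_deployment(bad))
# ['web: image must be pinned to a version tag or digest', 'web: resource limits are required']
```

Running such checks both in CI and at admission time catches violations early while still blocking anything that bypasses the pipeline.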

Document architecture decisions, runbooks for common operations, and disaster recovery procedures. Regular chaos engineering experiments validate resilience assumptions.

Establish FinOps practices as part of governance: enforce resource quotas and tagging standards, and review spending regularly.

Cloud Native Development Costs: Complete Investment Breakdown

Development investment varies based on application complexity, team size, and cloud provider selection. Here’s a comprehensive breakdown:

| Cost Category | Cost Range | Details |
|---|---|---|
| Infrastructure Costs | | |
| Compute instances | $0.05 – $0.50/hour | Per instance, varies by size and provider |
| Storage | $0.02 – $0.10/GB/month | Block, object, and database storage |
| Data transfer | $0.05 – $0.12/GB | Egress charges, inter-region transfers |
| Load balancers | $15 – $50/month | Per load balancer |
| Managed Kubernetes | $70 – $150/month | Control plane costs (EKS, AKS, GKE) |
| Development Costs | | |
| Developer training | $5,000 – $15,000 | Per developer, for cloud-native skills |
| CI/CD tools | $3,000 – $20,000/year | GitLab or CircleCI plans; hosting for self-managed Jenkins |
| Development environment | $2,000 – $10,000/year | Per developer, including tools and IDEs |
| Consulting services | $150 – $300/hour | Expert guidance and architecture review |
| Operational Costs | | |
| Monitoring & logging | $500 – $5,000/month | Datadog, New Relic, ELK Stack |
| Security tools | $10,000 – $100,000/year | Vulnerability scanning, WAF, compliance |
| DevOps engineers | $100,000 – $150,000/year | Per engineer salary (US market) |
| Backup & disaster recovery | $1,000 – $10,000/month | Based on data volume and RPO/RTO |
| Cost Optimization | | |
| Reserved instances savings | 30% – 70% | Compared to on-demand pricing |
| Spot instances savings | 50% – 90% | For fault-tolerant workloads |
| Auto-scaling efficiency | 30% – 50% | Reduction in over-provisioning |

ROI Timeline

Most organizations achieve positive ROI within 12-18 months through:

- Infrastructure savings: 30-50% reduction in operational costs

- Faster releases: significantly higher deployment frequency through automated CI/CD

- Reduced downtime: 99.95%+ availability saves thousands in lost revenue

- Developer productivity: 35-40% efficiency gains accelerate feature delivery

Organizations working with experienced partners like Bitcot accelerate development while managing costs effectively through proven frameworks and reusable components. Leveraging digital transformation services can help businesses optimize their adoption strategy and maximize ROI.

Cloud Native vs Traditional Application Development: Key Differences

Understanding these fundamental distinctions helps organizations make informed decisions about their application architecture strategy.

| Aspect | Traditional Applications | Cloud-Native Applications |
|---|---|---|
| Architecture | Monolithic, tightly coupled components | Microservices, loosely coupled services |
| Deployment | Manual, lengthy release cycles (monthly/quarterly) | Automated CI/CD, multiple releases daily |
| Scalability | Vertical scaling (upgrade hardware) | Horizontal scaling (add instances) |
| Downtime | Required for updates and maintenance | Zero-downtime rolling updates |
| Resilience | Single point of failure, complete system outage | Isolated failures, partial service degradation |
| Resource Usage | Over-provisioned, fixed capacity | Dynamic auto-scaling, pay-per-use |
| Time to Market | Months for new features | Days to weeks for feature release |
| Infrastructure | On-premises or IaaS | Cloud-native PaaS/serverless |
| Cost Model | High upfront CapEx investment | OpEx model with variable costs |
| Development Teams | Centralized, sequential workflows | Distributed, parallel development |

Future Trends in Cloud Native Development for 2025 and Beyond

Emerging technologies and evolving practices are shaping the next generation of these applications. Stay ahead with these innovations.

WebAssembly (Wasm)

WebAssembly extends beyond browsers to server-side applications. Wasm modules typically start faster and have a smaller footprint than traditional containers, making them well suited to edge computing and serverless workloads.

AI and Machine Learning Integration

Platforms increasingly incorporate AI capabilities. Automated optimization, predictive scaling, and intelligent monitoring improve efficiency and reliability without manual intervention.

Edge Computing

Processing data closer to users reduces latency and bandwidth costs. Architectures extend to edge locations, creating distributed systems that span cloud and edge environments.

FinOps Maturity

As cloud adoption grows, organizations prioritize cost optimization. Advanced FinOps practices including automated cost allocation, real-time optimization, and predictive budgeting become standard.

Policy-as-Code

Infrastructure as Code extends to compliance and governance. Policy-as-code tools automatically enforce security, compliance, and operational standards across cloud environments.

Getting Started with Cloud Native Development

Building these applications requires careful planning, the right tools, and often, expert guidance. Start with a pilot project to gain experience before tackling critical systems.

Invest in team training and establish clear architectural standards. Adopt proven patterns and leverage existing frameworks rather than reinventing solutions. For businesses with existing applications, legacy application migration to cloud requires a strategic approach that balances modernization with business continuity.

The journey delivers transformative benefits: improved agility, reduced costs, and enhanced user experiences. Organizations that embrace these principles position themselves for sustained competitive advantage in increasingly digital markets. Comprehensive legacy system modernization and migration services can help businesses navigate this transformation effectively.

Whether you’re modernizing legacy systems or building new applications from scratch, this architecture provides the foundation for scalable, resilient software that adapts to changing business needs. For organizations requiring enterprise application development with these capabilities, working with experienced partners ensures successful implementation.

Ready to accelerate your transformation? Expert guidance makes the difference between smooth adoption and costly mistakes. Partner with a trusted cloud application development company to leverage proven methodologies and best practices.