An AI-powered healthcare platform that leverages multi-agent coordination to automate complex tasks, streamline workflows, and enhance both operational efficiency and patient care.

Building the Intelligent Enterprise: From Cloud to Agent Orchestration

We turn LangChain and Generative AI pilots into production-grade agent workflows, handling the last mile by integrating tools, data, and infrastructure.

Need AI agents that can reason, make decisions, and use tools?

We build custom LangChain agents capable of multi-step workflows, API calls, data processing, and autonomous task execution.

How does it work?

Query

You start with a question or task.

Similarity Search

We search our vector database for content related to your query.

Ranking

We rank the search results to give the LLM the best possible context.

LLMs

We combine the information retrieved from the vector database with your query and a prompt, then pass it all to the LLM (for example, Llama).

Generated Output

Once all of that information is passed in, the LLM generates a coherent response for the user.
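The stages above (query, similarity search, ranking, LLM, generated output) can be sketched end to end in plain Python. This is a toy illustration under stated assumptions, not our production pipeline and not LangChain's API: the bag-of-words `embed` function stands in for a real embedding model, a Python list stands in for the vector database, and the `llm` argument is a hypothetical placeholder for a call to a model such as Llama.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Similarity search + ranking: score every chunk against the query, keep the top k."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]  # drop chunks with no overlap at all


def build_prompt(query: str, context: list[str]) -> str:
    """Combine the ranked context with the query and an instruction prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}"
    )


def answer(query: str, docs: list[str], llm: Callable[[str], str]) -> str:
    """Query -> similarity search -> ranking -> LLM -> generated output."""
    return llm(build_prompt(query, retrieve(query, docs)))


if __name__ == "__main__":
    docs = [
        "Employees accrue 20 vacation days per year.",
        "The office is closed on public holidays.",
        "Expense reports are due within 30 days.",
    ]
    fake_llm = lambda prompt: f"(LLM response to a {len(prompt)}-character prompt)"  # hypothetical
    print(retrieve("How many vacation days do employees get?", docs, k=1))
    print(answer("How many vacation days do employees get?", docs, fake_llm))
```

In a real deployment, `embed` would call an embedding model and `retrieve` would query a vector database, but the flow of data between the stages stays the same.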

Our LangChain Accelerator

Build reliable, scalable AI applications

- Production-Ready RAG Frameworks

- Optimized Prompt & Chain Libraries

- Built-In Evaluation & Guardrails

- Scalable Vector Database Architecture

- Deployment & Infrastructure Blueprints

Our Proven AI & LangChain Technology Stack

Our Featured Work

Autonomous HR Service Desk

An AI-driven platform that automates HR operations by seamlessly orchestrating specialized agents to handle policy-related queries, generate legal documents, and update job descriptions—ensuring fast, compliant, and autonomous employee experiences.

Auto-CRM Sales Assistant

An AI-powered Sales Assistant that streamlines field sales workflows by automating visit preparation, real-time client interaction handling, and post-visit data entry, empowering sales reps to focus on relationship-building while ensuring data accuracy and CRM automation.

Our Custom LangChain Development Services

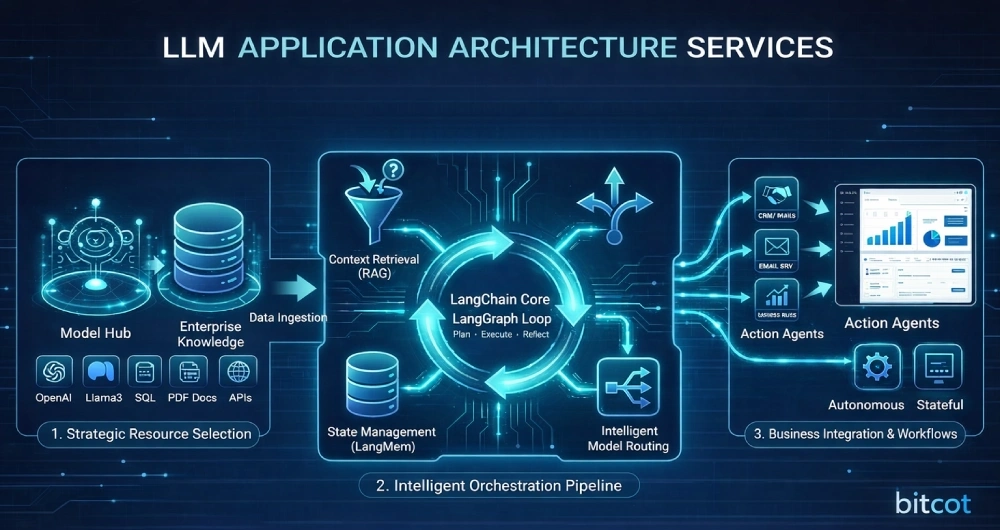

- LLM Application Architecture Services

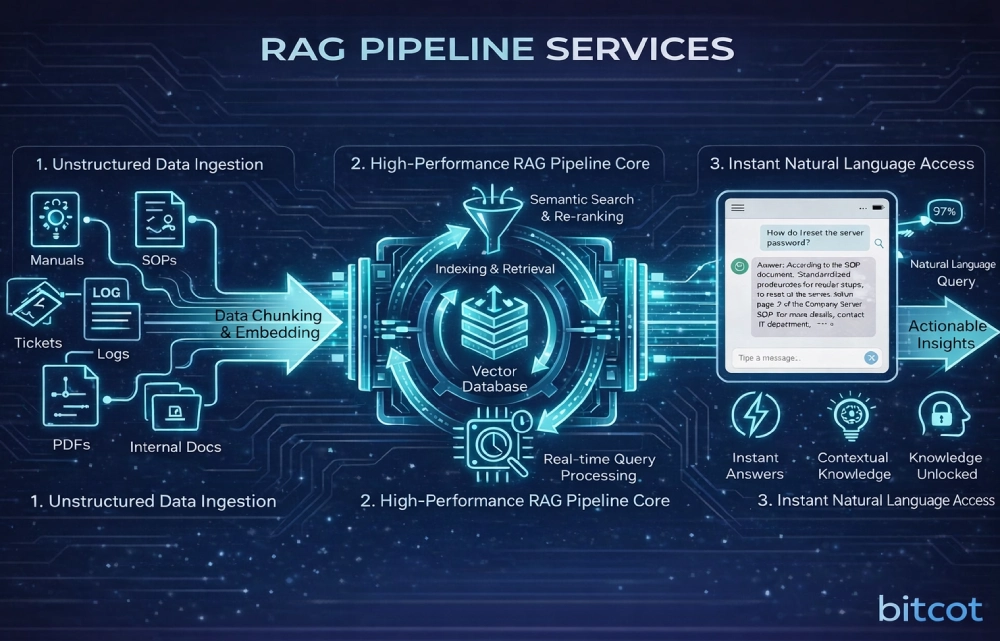

- RAG Pipeline Services

- Agentic Workflow Services

- Fine-Tuning Services

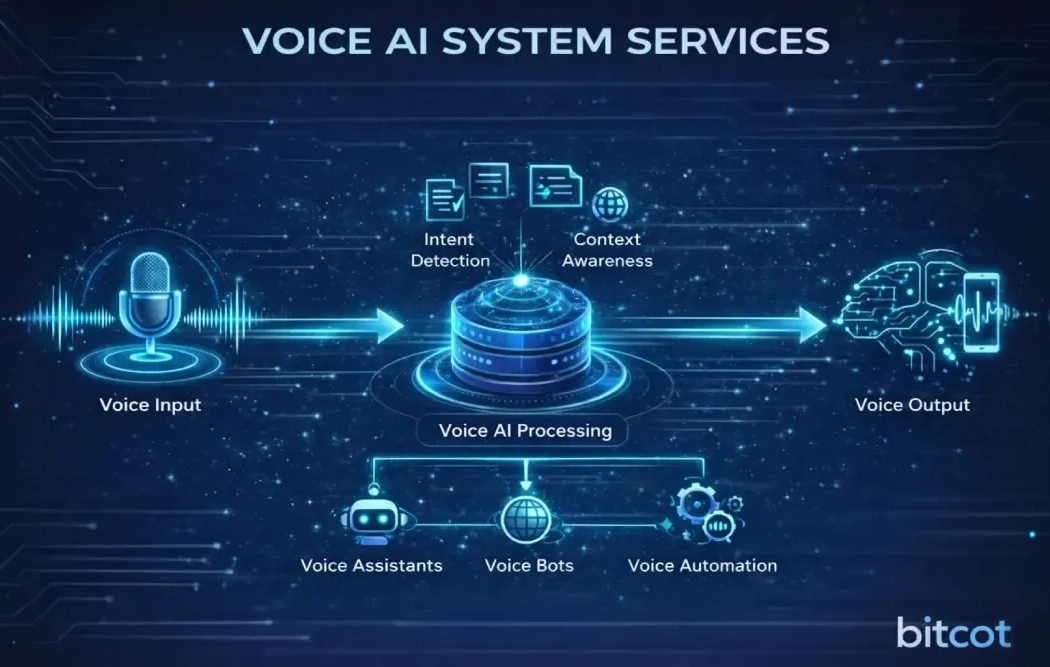

- Voice AI System Services

From model selection to LangChain orchestration patterns, we design end-to-end LLM application architectures that align AI capabilities with real business workflows.

We architect high-performance RAG pipelines that make unstructured data, manuals, SOPs, tickets, logs, PDFs, and internal documentation, instantly accessible through natural language.

We build LangChain-powered agents that can reason through complex tasks, use tools, call APIs, and execute multi-step workflows autonomously.

For specialized industries, general-purpose models aren’t enough. We fine-tune compact language models to understand your terminology, workflows, and edge cases.

We develop voice-enabled AI agents that combine speech recognition, language models, and action workflows, all orchestrated through LangChain.

Prebuilt platform features that drive massive adoption

Why Choose Our LangChain Development Company

Deep LangChain Expertise

We build advanced solutions, from intelligent chatbots to complex multi-agent systems. We specialize in chains, agents, tools, memory, and vector stores.

End-to-End AI Development

From strategy to deployment, we deliver production-ready AI systems with robust backends, secure data pipelines, and seamless user experiences.

Seamless Integration with Your Stack

Whether you need to connect LLMs with internal databases, CRMs, APIs, or proprietary tools, our solutions are designed to fit into your existing ecosystem.

Performance & Scalability First

Applications must be fast, reliable, and cost-efficient. We optimize prompt flows, caching strategies, and retrieval pipelines so your system performs at scale.
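Caching is one of the simplest of these optimizations: when the same prompt is sent with the same model settings, reuse the earlier response instead of calling the LLM again. A minimal sketch of an exact-match cache follows; `call_llm` and the model name are hypothetical placeholders. Exact-match caching fits best with deterministic settings (for example, temperature 0), and production systems often add expiry, persistent storage, or similarity-based caching on top of this idea.

```python
import hashlib
import json
from typing import Callable


class ResponseCache:
    """Exact-match cache keyed on the model name, temperature, and prompt."""

    def __init__(self, call_llm: Callable[[str, str, float], str]):
        self._call_llm = call_llm
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def _key(self, model: str, prompt: str, temperature: float) -> str:
        # Hash a canonical JSON encoding so every setting that affects the output is part of the key.
        payload = json.dumps({"m": model, "p": prompt, "t": temperature}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str, temperature: float = 0.0) -> str:
        key = self._key(model, prompt, temperature)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_llm(model, prompt, temperature)  # only pay for the call on a miss
        self._store[key] = response
        return response


def call_llm(model: str, prompt: str, temperature: float) -> str:
    return f"[{model}] answer to: {prompt}"  # hypothetical stand-in for a real API call


cache = ResponseCache(call_llm)
cache.complete("demo-model", "What is RAG?")
cache.complete("demo-model", "What is RAG?")
print(cache.hits, cache.misses)  # 1 1
```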

FAQs

What is "RAG" and does LangChain support it?

RAG stands for Retrieval-Augmented Generation. It’s a technique where the AI retrieves information from your private documents (PDFs, databases, Notion) before answering a question. LangChain is one of the most widely used frameworks for building RAG pipelines, offering built-in document loaders, text splitters, and retrievers to load, split, and search your data.
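The "split" step usually means cutting long documents into overlapping chunks before they are embedded, so that text near a chunk boundary keeps some of its surrounding context. LangChain ships its own splitters (for example, `RecursiveCharacterTextSplitter`, which prefers to break on paragraphs and sentences); the simplified fixed-size splitter below is only a sketch of the idea, not that implementation.

```python
def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks; consecutive chunks share `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
```

Chunk size and overlap are tuning knobs: larger chunks carry more context per retrieval hit, while smaller chunks make matches more precise.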

Does LangChain send my data to third parties?

LangChain itself is a code library, not a service that stores your data. However, it connects to LLM providers (like OpenAI or Anthropic) and vector databases (like Pinecone). Your data flow depends on the integrations you choose to configure. If you use local models (e.g., via Ollama), your data never leaves your infrastructure.

What can I build with LangChain?

Common use cases include:

- Chatbots that “talk” to your PDF documents or company wiki.

- Customer Support Bots that can look up order status in a database.

- Coding Assistants that help debug code.

- Research Agents that browse the web and summarize news.

Can LangGraph handle "Human-in-the-loop" workflows?

Yes, this is a core feature. You can configure LangGraph to pause execution at specific “breakpoints” (e.g., before sending an email or executing a financial transaction) to wait for human approval before proceeding.
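The breakpoint idea can be illustrated without any framework. The sketch below is plain Python, not LangGraph's API (LangGraph provides its own interrupt and checkpointing mechanisms); the step names and the `run_until_breakpoint` helper are hypothetical. The workflow runs step by step, stops before any step marked as sensitive, and resumes from the same position only after a human approves.

```python
from dataclasses import dataclass
from typing import Callable, Collection, Optional

Step = tuple[str, Callable[[dict], None]]


@dataclass
class Run:
    state: dict
    position: int = 0                # index of the next step to execute
    paused_at: Optional[str] = None  # name of the step awaiting approval, if any


def run_until_breakpoint(steps: list[Step], run: Run, breakpoints: Collection[str],
                         approved: Collection[str] = frozenset()) -> Run:
    """Execute steps in order, pausing before any breakpoint step that has not been approved."""
    while run.position < len(steps):
        name, fn = steps[run.position]
        if name in breakpoints and name not in approved:
            run.paused_at = name
            return run  # hand control back to a human reviewer
        fn(run.state)
        run.position += 1
    run.paused_at = None
    return run


steps: list[Step] = [
    ("draft_email", lambda s: s.update(draft=f"Refund approved for order {s['order']}")),
    ("send_email", lambda s: s.update(sent=True)),  # hypothetical sensitive action
]

run = run_until_breakpoint(steps, Run(state={"order": 42}), breakpoints={"send_email"})
print(run.paused_at)  # send_email
# A human reviews run.state["draft"], then resumes with approval:
run = run_until_breakpoint(steps, run, breakpoints={"send_email"}, approved={"send_email"})
print(run.state.get("sent"))  # True
```

Keeping the position and state in a separate `Run` object is what makes resuming possible; a production system would persist that object so a run can wait hours for approval.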

What is the difference between LangChain and LangGraph?

Think of LangChain as a library for building sequences of steps that flow in one direction (directed acyclic graphs, or DAGs), which is great for simple chains. LangGraph is an extension built on top of LangChain specifically designed for building stateful, cyclic agents. If your application needs loops (e.g., “ask the user for clarification until satisfied”) or complex decision-making, LangGraph is the better fit.
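The difference is easiest to see in a loop that a one-directional chain cannot express: keep asking for clarification until the request is specific enough. The sketch below is plain Python rather than LangGraph code; the node names, the `is_specific` check, and the scripted user replies are hypothetical. Each node returns the name of the next node, and the edge from `clarify` back to `check` forms the cycle.

```python
from typing import Callable, Iterator


def is_specific(request: str) -> bool:
    """Hypothetical stand-in for an LLM judging whether the request is clear enough."""
    return len(request.split()) >= 4


def run_graph(initial_request: str, user_replies: Iterator[str], max_turns: int = 5) -> dict:
    state = {"request": initial_request, "turns": 0}

    def check(s: dict) -> str:
        # Conditional edge: go to "answer" if the request is clear, otherwise loop to "clarify".
        return "answer" if is_specific(s["request"]) else "clarify"

    def clarify(s: dict) -> str:
        if s["turns"] >= max_turns:  # guard so the cycle always terminates
            s["result"] = "Gave up: request still unclear"
            return "END"
        s["turns"] += 1
        s["request"] += " " + next(user_replies)  # ask the user and append their reply
        return "check"

    def answer(s: dict) -> str:
        s["result"] = f"Answering: {s['request']}"
        return "END"

    nodes: dict[str, Callable[[dict], str]] = {"check": check, "clarify": clarify, "answer": answer}
    current = "check"
    while current != "END":
        current = nodes[current](state)
    return state


print(run_graph("report", iter(["sales", "for Q3 2024"]))["result"])
```

The explicit `max_turns` guard matters in any cyclic agent: without it, a model that never judges the request "clear" would loop forever.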

Can I use LangSmith if I'm not using LangChain?

Yes! While it integrates natively with LangChain, LangSmith can be used to trace and monitor applications built with any LLM framework (like the OpenAI SDK or LlamaIndex) using its standalone SDK.
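Conceptually, tracing means recording each call's inputs, outputs, errors, and latency so runs can be inspected later. The decorator below is a minimal, framework-agnostic illustration of that idea, not the LangSmith SDK (which ships its own decorator and client); the in-memory `TRACES` list is a hypothetical stand-in for a tracing backend.

```python
import functools
import time
from typing import Any, Callable

TRACES: list[dict[str, Any]] = []  # hypothetical in-memory stand-in for a tracing backend


def traced(fn: Callable) -> Callable:
    """Record name, inputs, output or error, and latency for every call to fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        record = {"name": fn.__name__, "inputs": {"args": args, "kwargs": kwargs}}
        start = time.perf_counter()
        try:
            record["output"] = fn(*args, **kwargs)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise  # tracing must not swallow the original error
        finally:
            record["latency_s"] = time.perf_counter() - start
            TRACES.append(record)
    return wrapper


@traced
def summarize(text: str) -> str:
    # Placeholder for a call made with any LLM SDK.
    return text[:20]


summarize("Tracing works with any Python function.")
print(TRACES[-1]["name"], TRACES[-1]["latency_s"] >= 0)
```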

How does RAG reduce AI "hallucinations"?

Hallucinations often happen when an LLM guesses an answer it doesn’t know. RAG reduces this risk by having the model look at your specific data (PDFs, company policies) first. The retrieved data is passed to the model as context, together with an instruction such as: “Use only this information to answer the question.”

What is "Hybrid Search" in RAG?

Simple RAG uses “Semantic Search” (matching concepts). Hybrid Search combines this with “Keyword Search” (matching exact words). This matters for queries that mix exact terms with broader meaning: for example, finding the exact name “Project Alpha” (keyword) in content about “Q3 Financial Reports” (semantic).
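One common way to merge the keyword and semantic result lists is Reciprocal Rank Fusion (RRF): each document scores the sum of 1 / (k + rank) over every list it appears in, so documents ranked well by both searches rise to the top. RRF is one fusion method among several (weighted score blending is another), k = 60 is a commonly used default, and the document IDs below are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs (best first) into one ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["alpha_q3_report", "alpha_roadmap", "q2_summary"]   # exact-term matches
semantic_hits = ["q3_financials", "alpha_q3_report", "q3_forecast"]  # meaning-based matches
print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
# ['alpha_q3_report', 'q3_financials', 'alpha_roadmap', 'q2_summary', 'q3_forecast']
```

Because RRF uses only ranks, it avoids having to normalize keyword scores (such as BM25) and vector similarities onto a common scale.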

Do I need a Vector Database for RAG?

Generally, yes. Vector databases (like Pinecone, Weaviate, or pgvector) store your data as numeric vectors (embeddings), so content with similar meaning can be found by comparing vectors mathematically. LangChain integrates with over 50 different vector stores, so you can likely use one you already prefer.

How do you ensure the AI gives accurate, trustworthy answers?

We use Retrieval-Augmented Generation (RAG), evaluation pipelines, and guardrails to ground responses in your actual data. Our systems are designed to cite sources, reduce hallucinations, and continuously improve through monitoring and feedback loops.
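One simple guardrail in an evaluation pipeline is to check that every citation in a generated answer points to a source that was actually retrieved. The sketch assumes a hypothetical convention in which the model cites sources as `[1]`, `[2]`, and so on; real evaluation suites combine checks like this with relevance and faithfulness scoring.

```python
import re


def check_citations(answer: str, num_sources: int) -> dict:
    """Flag answers with no citations, or with citations to sources that were not retrieved."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    invalid = sorted(n for n in cited if not 1 <= n <= num_sources)
    return {
        "has_citations": bool(cited),
        "invalid_citations": invalid,
        "passed": bool(cited) and not invalid,
    }


print(check_citations("Refunds take 5 days [1], per policy [3].", num_sources=2))
# {'has_citations': True, 'invalid_citations': [3], 'passed': False}
```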

Can your AI systems integrate with our existing software and databases?

Yes. We specialize in integrating LangChain applications with CRMs, ERPs, internal APIs, databases, and third-party platforms. This allows AI agents to not only answer questions but also take actions like updating records, creating tickets, or triggering workflows.
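Taking actions usually means letting the model propose a tool and its arguments, then having application code validate and execute that proposal. The sketch below shows that dispatch step in plain Python with hypothetical tools (`create_ticket`, `update_record`) and an assumed JSON tool-call format; it is not LangChain's tool-calling API, and the tools are stubs rather than real CRM or ticketing integrations.

```python
import json
from typing import Any, Callable


def create_ticket(title: str, priority: str = "normal") -> dict:
    return {"ticket_id": "T-1", "title": title, "priority": priority}  # stub


def update_record(record_id: str, field: str, value: Any) -> dict:
    return {"record_id": record_id, field: value, "updated": True}  # stub


TOOLS: dict[str, Callable[..., dict]] = {
    "create_ticket": create_ticket,
    "update_record": update_record,
}


def dispatch(tool_call_json: str) -> dict:
    """Validate a model-proposed tool call and run it; only registered tools can execute."""
    call = json.loads(tool_call_json)
    name, args = call.get("name"), call.get("arguments", {})
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    try:
        return TOOLS[name](**args)
    except TypeError as exc:  # typically wrong or missing arguments
        return {"error": str(exc)}


print(dispatch('{"name": "create_ticket", "arguments": {"title": "VPN down", "priority": "high"}}'))
# {'ticket_id': 'T-1', 'title': 'VPN down', 'priority': 'high'}
```

Returning errors as data, rather than raising, lets the agent see what went wrong and retry with corrected arguments.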

Is our data secure when using LangChain-based AI systems?

Security is built into every solution we develop. We implement access controls, data encryption, secure API handling, and permission-aware retrieval so sensitive information is only accessible to authorized users and systems.
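Permission-aware retrieval means filtering candidate documents by the requesting user's access rights before anything reaches the prompt, so the model never sees content the user could not open directly. A minimal sketch, assuming each chunk carries a hypothetical `allowed_roles` metadata field:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_roles: frozenset[str]  # hypothetical access-control metadata


def permitted(chunks: list[Chunk], user_roles: set[str]) -> list[Chunk]:
    """Keep only chunks that share at least one role with the requesting user."""
    return [c for c in chunks if c.allowed_roles & user_roles]


corpus = [
    Chunk("Holiday calendar", frozenset({"employee", "hr"})),
    Chunk("Salary bands", frozenset({"hr"})),
]
print([c.text for c in permitted(corpus, {"employee"})])  # ['Holiday calendar']
```

Filtering at retrieval time, rather than asking the model to withhold restricted content, keeps the access decision in deterministic code.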

Do you build prototypes or production-ready systems?

Our team does both, but our focus is on production. We can rapidly prototype to validate ideas, then transition to scalable, monitored, and optimized deployments that are reliable in real-world environments.