Let’s be honest: building a RAG (Retrieval-Augmented Generation) prototype is no longer a competitive advantage; it’s a weekend project. In the current AI landscape, anyone can string together a basic chatbot that “talks” to a few company PDFs.

The real challenge, and where most enterprise AI initiatives stall, is the “Production Gap.”

There is a massive difference between a demo that works on a founder’s laptop and an enterprise-grade AI engine that handles thousands of global queries, maintains strict data security, and delivers sub-second responses.

For a business leader, the stakes of moving too fast are considerable: high latency kills user adoption, and “hallucinations” (AI-generated inaccuracies) can lead to significant brand damage or legal liability.

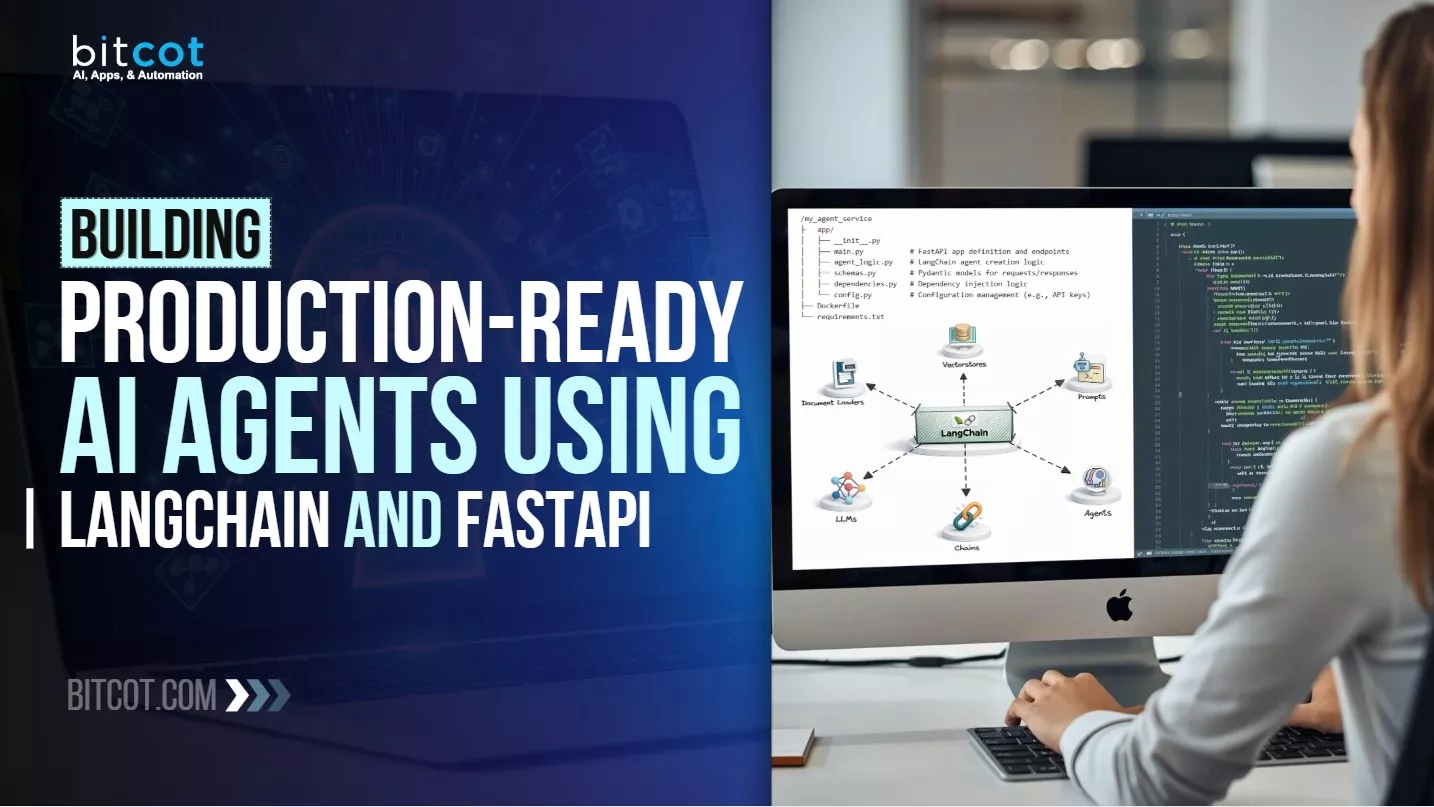

If you are looking to move beyond the pilot phase, your choice of architecture is the most critical decision you will make. This guide focuses on the “Golden Stack” for professional AI deployment: LangChain and FastAPI.

- LangChain acts as your orchestration layer, ensuring your AI logic is modular, transparent, and capable of handling complex business workflows.

- FastAPI serves as your high-performance delivery layer, providing the asynchronous speed and “enterprise-ready” security protocols required to scale your product to a global user base.

This isn’t a tutorial on how to write snippets of code. This is a strategic roadmap for building reliable, scalable, and secure AI infrastructure. We will explore how to transform RAG from a promising experiment into a robust, revenue-driving asset for your organization.

Key Takeaways: Production-Ready RAG (2026)

- The “Production Gap” Logic: Transitioning from a demo to an enterprise-grade engine requires shifting from “mostly works” to consistent reliability. Success in 2026 is defined by an agent performing correctly 10,000 times consecutively, prioritizing sub-2-second latency and <1% hallucination rates.

- The Golden Stack (Architecture): A widely adopted pairing for high-concurrency deployment is LangChain (the orchestration “brain”) with FastAPI (the high-performance “engine”). This stack enables Asynchronous Efficiency, allowing systems to handle thousands of global queries without bottlenecking.

- Precision and Governance: Mitigate risk by implementing Pydantic for rigid data validation, LangGraph for self-correcting agentic loops, and Semantic Chunking to preserve document context. These safeguards help keep AI outputs grounded in, and traceable to, your proprietary data.

- Strategic ROI & Efficiency: Beyond automation, production RAG delivers measurable business impact, such as a 40% deflection of Tier-1 support tickets and a 30% acceleration in complex compliance and legal review cycles.

- Vendor Agility & Future-Proofing: A modular, model-agnostic architecture prevents vendor lock-in. This allows enterprises to swap LLM providers (e.g., from OpenAI to Anthropic) in minutes, potentially reducing future re-engineering costs by 40%.

- Human-in-the-Loop (HITL) Guardrails: Maintain oversight by automating 90% of workflows while requiring human verification for high-value or high-risk actions, effectively balancing scale with corporate responsibility.

What Makes a RAG Application Production-Ready?

Moving a RAG (Retrieval-Augmented Generation) system from a successful pilot to a live environment is the most difficult stage of the AI lifecycle.

For a business leader, “production-ready” means more than just functional code. It signifies a system that is reliable, scalable, and safe enough to represent your brand to customers or employees.

To transition out of the prototype phase, your application must meet four specific enterprise pillars.

Accuracy and Grounding (The Trust Factor)

A prototype that is “usually right” is a liability in a business context. Production-ready RAG requires a rigorous evaluation framework to eliminate hallucinations.

This involves implementing a process where the AI is strictly grounded in your proprietary data. If the answer isn’t in the provided documents, the system must be programmed to admit it doesn’t know, rather than guessing.
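The "admit it doesn't know" rule can be enforced in two places: in the prompt itself, and in code that refuses before the model is ever called when retrieval returns nothing. The sketch below is a minimal illustration under stated assumptions: `Passage`, `REFUSAL`, `build_grounded_prompt`, and `answer` are hypothetical names, and `llm` stands in for whatever model client you use.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical fixed refusal string, so downstream code can detect "no answer".
REFUSAL = "I don't know based on the provided documents."


@dataclass(frozen=True)
class Passage:
    source: str  # e.g. a file name or document ID
    text: str


def build_grounded_prompt(question: str, passages: list[Passage]) -> Optional[str]:
    """Return a prompt restricted to the passages, or None if there is nothing to ground on."""
    if not passages:
        return None
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using ONLY the numbered passages below and cite them like [1].\n"
        f"If the passages do not contain the answer, reply exactly: {REFUSAL}\n\n"
        f"Passages:\n{context}\n\nQuestion: {question}"
    )


def answer(question: str, passages: list[Passage], llm: Callable[[str], str]) -> str:
    """Refuse without calling the model when retrieval found nothing."""
    prompt = build_grounded_prompt(question, passages)
    if prompt is None:
        return REFUSAL
    return llm(prompt)
```

A prompt instruction alone does not guarantee the model complies, which is why the empty-retrieval check lives in code rather than in the prompt.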

Low Latency at Scale

User experience is dictated by speed. While a 10-second wait for a response might be acceptable during a boardroom demo, it will severely limit adoption in the real world.

A production-ready architecture uses asynchronous processing to handle multiple user requests simultaneously. By leveraging tools like FastAPI, you ensure the system remains responsive even as your user base grows from ten people to ten thousand.
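To make the benefit of asynchronous processing concrete, here is a small standard-library sketch. `fake_llm_call` is a hypothetical stand-in for a slow model request; the point is only that twenty overlapping waits take roughly as long as one, not twenty times as long.

```python
import asyncio
import time


async def fake_llm_call(query: str, delay: float = 0.05) -> str:
    """Simulate a slow, I/O-bound model call (assumption: real calls are network waits)."""
    await asyncio.sleep(delay)
    return f"answer to: {query}"


async def handle_many(queries: list[str]) -> list[str]:
    """Run all calls concurrently; results come back in input order."""
    return await asyncio.gather(*(fake_llm_call(q) for q in queries))


def timed_run(queries: list[str]) -> tuple[list[str], float]:
    """Return the answers and the wall-clock seconds the whole batch took."""
    start = time.perf_counter()
    answers = asyncio.run(handle_many(queries))
    return answers, time.perf_counter() - start
```

Run sequentially, twenty 0.05-second calls would take about one second; run through `handle_many`, the batch finishes in a small fraction of that, because the event loop serves other requests while each call is waiting.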

Data Security and Governance

Enterprise applications must adhere to strict data privacy standards. Production readiness means having a clear “chain of custody” for your data. This includes:

- Role-Based Access Control (RBAC): Ensuring a user only retrieves information they are cleared to see.

- Data Encryption: Protecting sensitive information both at rest and in transit.

- Audit Trails: Maintaining logs of what data was accessed and which model generated the response.
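The access-control and audit-trail points above can be sketched in a few lines. This is an illustration rather than a complete security design: the `Chunk` shape, the role names, and the log fields are all assumptions, and a real deployment would typically push the role filter down into the vector store query.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # roles cleared to read this chunk


def filter_by_role(chunks: list[Chunk], user_roles: set[str]) -> list[Chunk]:
    """Keep only chunks that share at least one role with the user."""
    return [c for c in chunks if c.allowed_roles & user_roles]


def audit_record(user_id: str, chunks: list[Chunk], model_name: str) -> str:
    """Serialize which documents were used, by whom, and with which model."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "doc_ids": [c.doc_id for c in chunks],
            "model": model_name,
        }
    )
```

The filter must run before retrieved text reaches the prompt; once restricted content is in the model's context, no downstream check can reliably keep it out of the answer.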

Observability and Feedback Loops

Unlike traditional software, AI performance can “drift” over time. A production system must include monitoring tools that track the quality of both the retrieval (did we find the right documents?) and the generation (did the LLM summarize them correctly?).

This allows your team to identify and fix bottlenecks or accuracy drops before the end-user notices them.
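One simple way to monitor the retrieval half is a hit rate over a fixed set of test questions with known relevant documents. The sketch below assumes such a labeled set exists; the function names and the 0.05 tolerance are illustrative choices, not a standard.

```python
def hit_rate_at_k(
    results: dict[str, list[str]],
    relevant: dict[str, set[str]],
    k: int,
) -> float:
    """Fraction of queries whose top-k results include at least one relevant document."""
    hits = sum(
        1 for query, ranked in results.items() if relevant[query] & set(ranked[:k])
    )
    return hits / len(results)


def drifted(baseline: float, current: float, tolerance: float = 0.05) -> bool:
    """Flag a drop in quality larger than the tolerance."""
    return baseline - current > tolerance
```

Re-running the same question set after every data refresh or model change, and alerting when `drifted` returns true, turns "drift" from an anecdote into a tracked number.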

Why Basic RAG Fails: Building Scalable Enterprise AI for Production

For business leaders, the appeal of Retrieval-Augmented Generation (RAG) is clear: it promises to turn proprietary data into a competitive advantage without the massive expense of training a custom AI model.

However, there is a significant “performance gap” between a successful pilot and a tool that employees can actually rely on.

In a prototype, a 60% accuracy rate feels like magic. In a production environment, that same 40% failure rate represents a liability.

Understanding why simple RAG systems struggle is the first step toward building a tool that delivers genuine ROI.

1. The Accuracy Wall: Similarity Is Not Relevance

The most common mistake is assuming that because two pieces of text are “mathematically similar,” one must contain the answer to the other.

- The Business Risk: A basic RAG system might retrieve a 2023 product manual when a user asks about a 2026 feature simply because the language is similar.

- The Reality: Without advanced filtering, your AI may confidently present outdated or irrelevant information as “the truth,” leading to costly operational errors.

2. Context Fragmentation and “Data Blindness”

Simple RAG breaks your documents into small, disconnected “chunks” so they fit within the model’s limited context window. While efficient, this often strips away the context necessary for a correct answer.

- The Example: If a financial figure is located in a table, a basic system might retrieve the number but lose the column header that explains what that number represents.

- The Result: Your leadership team receives data that is technically accurate but contextually wrong, which is often more dangerous than having no data at all.
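One common remedy for the table problem is to attach the column headers (and a caption, if there is one) to every row before it is indexed. The function below is a minimal sketch of that idea; the name `table_rows_to_chunks` and the output format are assumptions, and the figures in the usage note are made up.

```python
def table_rows_to_chunks(
    header: list[str],
    rows: list[list[str]],
    caption: str = "",
) -> list[str]:
    """Turn each table row into a self-describing text chunk."""
    chunks = []
    for row in rows:
        # Pair every cell with its column header so the number keeps its meaning.
        pairs = "; ".join(f"{col}: {val}" for col, val in zip(header, row))
        chunks.append(f"{caption} | {pairs}" if caption else pairs)
    return chunks
```

For a header of `["Region", "Q3 Revenue (USD m)"]` and a row `["EMEA", "41.2"]`, the chunk becomes `Region: EMEA; Q3 Revenue (USD m): 41.2`, so a retrieved figure always arrives with its label.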

3. The Problem of “Noisy” Data

In a corporate environment, data is messy. It lives in nested folders, complex spreadsheets, and legacy PDFs. Simple RAG pipelines are often “noise-sensitive.”

When the system pulls in five different documents to answer a query, and three of them are tangentially related fluff, the AI’s reasoning capability degrades. The relevant passages are diluted by irrelevant context, and the signal is lost in the noise.

Key Insight: Moving from a demo to production isn’t about getting a better LLM; it’s about building a more sophisticated “data retrieval” engine that understands business logic, not just word patterns.

Benefits of Using LangChain and FastAPI for Enterprise-Grade RAG

While basic scripts fail under the weight of real-world data, the combination of LangChain and FastAPI has become one of the most widely adopted stacks for production RAG in 2026. This duo bridges the gap between a fragile experimental pilot and a robust, scalable corporate asset.

For business leaders, this stack isn’t just about code; it is about speed to market, risk mitigation, and operational scalability.

LangChain: The “Operating System” for AI Logic

LangChain provides the scaffolding that makes AI predictable. Instead of writing custom code to connect every database and model, LangChain offers a modular library of “primitives.”

- Vendor Agility: LangChain allows you to swap your underlying Large Language Model (e.g., switching from OpenAI to Anthropic or a private Llama 3 instance) without rewriting your entire application. This prevents vendor lock-in, a critical concern for long-term enterprise strategy.

- Built-in Governance: Through companion tooling like LangSmith, the ecosystem provides “observability.” You can trace how the AI arrived at a specific answer, which documents it retrieved and cited, and where the logic broke down. This transparency is essential for compliance and quality control.

- Complex Reasoning (Agents): Beyond simple Q&A, LangChain enables “Agentic RAG.” This allows the system to realize when it doesn’t have enough information and autonomously decide to search a different database or use a calculator to verify a financial figure.
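The vendor-agility point rests on a simple design idea: application code depends on a shared interface, not on a specific provider. The sketch below shows that idea in plain Python. It is not LangChain's API; `ChatModel`, `ProviderA`, `ProviderB`, and `make_model` are hypothetical names, and the two providers are stubs rather than real clients.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only contract the application relies on."""

    def complete(self, prompt: str) -> str: ...


class ProviderA:
    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"  # stub in place of a real API call


class ProviderB:
    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"  # stub in place of a real API call


# Swapping vendors becomes a configuration change, not a code rewrite.
PROVIDERS: dict[str, type] = {"a": ProviderA, "b": ProviderB}


def make_model(name: str) -> ChatModel:
    return PROVIDERS[name]()


def summarize(model: ChatModel, text: str) -> str:
    """Business logic written once, against the interface."""
    return model.complete(f"Summarize: {text}")
```

LangChain's model wrappers follow the same principle of a common interface across providers, which is what makes the swap practical. Prompts usually still need re-testing after a change, since models respond differently to the same wording.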

FastAPI: The High-Performance Gateway

If LangChain is the “brain,” FastAPI is the “nervous system.” It is a modern, async-first Python web framework that is well suited to the high-concurrency needs of AI applications.

- Asynchronous Efficiency: AI models often take several seconds to generate a response. A synchronous worker sits blocked for that entire wait, whereas FastAPI’s async/await model lets a single process keep serving other requests while each model call is in flight, so throughput is limited by downstream capacity rather than by idle web workers.

- Automatic Data Validation: Using Pydantic, FastAPI checks that every request and response matches a declared schema (types, lengths, ranges). This significantly reduces “garbage in, garbage out” errors and rejects malformed input early. Schema validation does not by itself stop prompt injection or data leaks, so it should be paired with dedicated prompt-level and access-control safeguards.

- Instant Documentation: FastAPI automatically generates interactive API documentation (Swagger UI). This allows your internal IT teams and front-end developers to integrate the AI service into your existing corporate dashboard in hours rather than days.

| Business Priority | How This Stack Delivers |
| --- | --- |
| Cost Efficiency | High concurrency in FastAPI means you need fewer servers to handle more users, lowering cloud infrastructure bills. |
| Security | Pydantic models in FastAPI provide a robust layer of defense, while LangChain’s modularity allows for local, private data hosting. |
| Reliability | LangChain’s “Chains” create repeatable, auditable workflows that move away from “unpredictable magic” toward structured business logic. |
| Future-Proofing | Both frameworks have massive community support in 2026, ensuring a steady pool of engineering talent and a roadmap for upcoming AI advancements. |

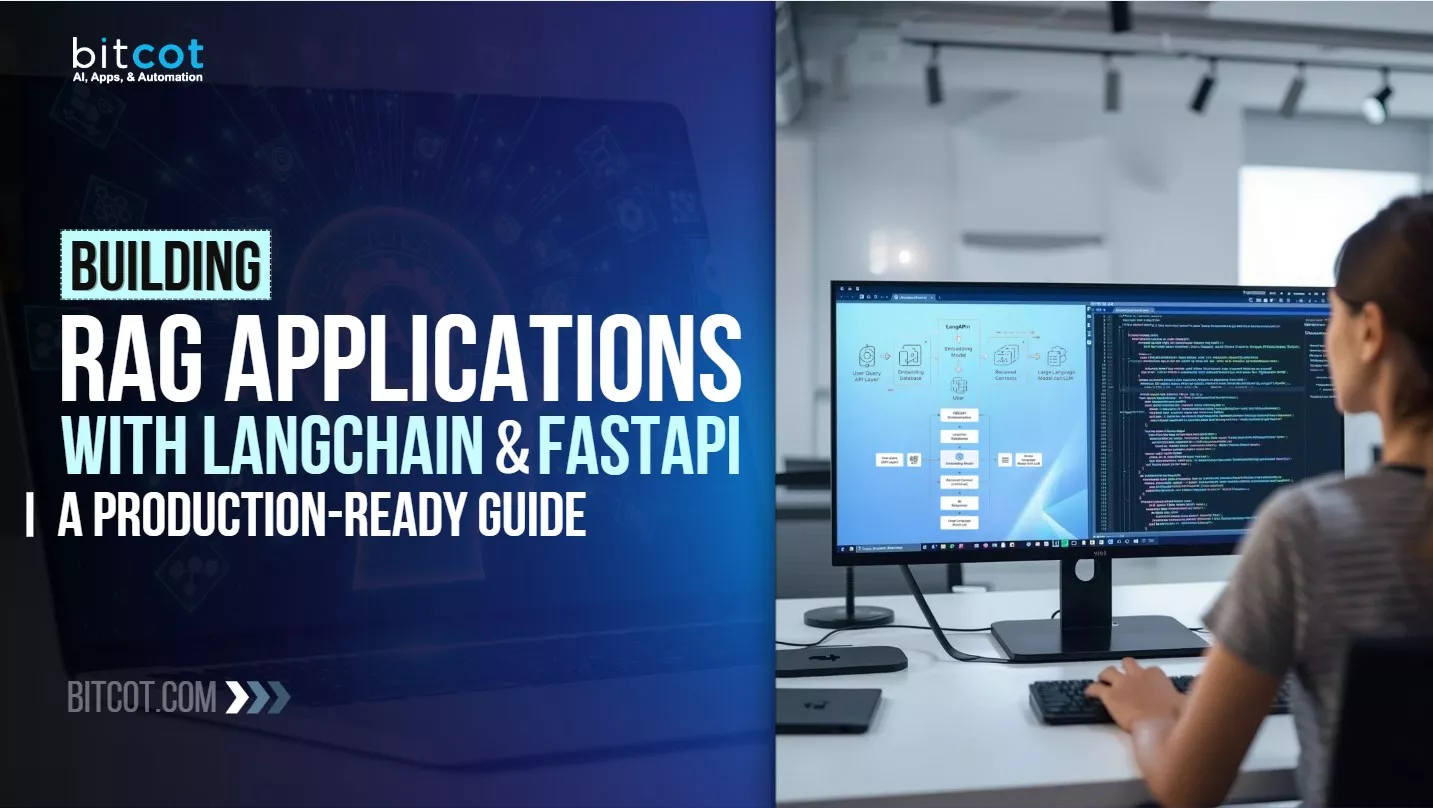

How to Build RAG Applications with LangChain and FastAPI

Building a production-ready RAG system is a strategic journey that moves from initial discovery to a globally scalable API.

To ensure an organization captures real business value while maintaining strict security, this multi-phase roadmap serves as the definitive blueprint for implementation.

Phase 1: Discovery and Strategic Alignment (Weeks 1-4)

Before a single line of code is written, the focus is on ROI Alignment. A project without defined success metrics is a research experiment, not a business initiative.

- Use Case Prioritization: Identify high-friction workflows, such as technical support deflection or legal contract synthesis, where manual research time is currently a major cost driver.

- The Knowledge Audit: Evaluate the state of internal data. Since enterprise data is often fragmented across wikis, PDFs, and emails, this phase identifies which repositories are the “authoritative sources of truth.”

- KPI Benchmarking: Establish metrics like “Reduction in Resolution Time” or “Accuracy Rate.” This ensures the project remains tied to a clear business case rather than technical hype.

Phase 2: Institutional Knowledge Ingestion (Months 2-3)

The foundation of enterprise RAG is Knowledge Governance. This phase transforms raw storage into a high-fidelity intelligence pipeline.

- Semantic Intelligence Partitioning: Naive pipelines split data into arbitrary fixed-size blocks, which can cut a legal clause or financial insight in half. Semantic Chunking instead places boundaries where a topic starts and ends, so each retrieved chunk carries a coherent, self-contained unit of meaning.

- Dynamic Security Enrichment: Metadata-driven access control is implemented here. By tagging data with security levels (e.g., “Executive Only”), the system automatically filters what it “sees” based on the user’s credentials, respecting existing corporate hierarchies and data privacy mandates.
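The idea behind semantic chunking can be shown with a deliberately simple stand-in. Production systems usually compare sentence embeddings; the sketch below substitutes word overlap (Jaccard similarity) between neighboring sentences so it runs with the standard library alone. The function names and the 0.1 threshold are assumptions chosen for illustration.

```python
import re


def _words(sentence: str) -> set[str]:
    """Lowercased word set of a sentence."""
    return set(re.findall(r"[a-z0-9]+", sentence.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two sentences have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def semantic_chunks(text: str, threshold: float = 0.1) -> list[str]:
    """Start a new chunk whenever adjacent sentences share too little vocabulary."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if jaccard(_words(prev), _words(cur)) < threshold:
            chunks.append([cur])  # topic shift: open a new chunk
        else:
            chunks[-1].append(cur)  # same topic: extend the current chunk
    return [" ".join(chunk) for chunk in chunks]
```

Given two sentences about a termination clause followed by two about regional revenue, this splits the text into two chunks at the topic change, whereas a fixed-size splitter could cut either pair in half.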

Phase 3: Precision Architecture & Orchestration (Months 3-6)

This phase bridges the “Trust Gap” by ensuring the AI is consistently accurate and governed by business logic.

- Hybrid Intelligence Search: A combination of Semantic Vector Search (finding meaning) and Lexical Keyword Search (finding exact names or part numbers) is used so that both conceptually related passages and exact-match terms are retrieved.

- Strategic Re-ranking: A Cross-Encoder Re-ranker scores each of the top search results against the query for relevance. It filters out “noise” and passes only high-signal passages to the AI, which is one of the most effective ways to reduce hallucinations.

- Operational Guardrails: Deterministic Prompting replaces “creative” AI behavior with disciplined business logic. This mandates that the AI cites its sources and adheres to a professional brand voice, ensuring every interaction is a safe representation of the company.
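Hybrid search needs a way to merge two differently scored result lists. One widely used option is Reciprocal Rank Fusion (RRF), shown below as a sketch. RRF is our choice for illustration, not something the roadmap above prescribes, and the document IDs in the usage note are placeholders.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document scores 1 / (k + rank) per list it appears in."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
```

If vector search returns `["a", "b", "c"]` and keyword search returns `["c", "a", "d"]`, the fused order is `["a", "c", "b", "d"]`: documents found by both retrievers rise above those found by only one. A cross-encoder re-ranker would then rescore the top of this fused list.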

Phase 4: Delivery, Scaling, and ROI Monitoring (Month 6+)

The final phase focuses on global performance, turning AI logic into a fast, secure, and measurable web service.

- Asynchronous High-Performance Delivery: Using FastAPI, a system is built to handle thousands of global users simultaneously. High latency is a primary killer of user adoption; this architecture ensures that “intelligence” never comes at the cost of “efficiency.”

- Integrated Production Security: The AI is bridged directly into existing Single Sign-On (SSO) and security infrastructure. Proprietary data remains within an encrypted perimeter, supporting compliance with frameworks and regulations such as SOC 2 and GDPR.

- Strategic Observability: Enterprise Monitoring is integrated to track the ROI of every query. A leadership dashboard monitors accuracy rates, latency, and “Cost-per-Insight,” providing the transparency needed to justify long-term AI investment.
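Two of the dashboard numbers mentioned above are straightforward to compute. The sketch below uses a nearest-rank percentile for latency and a token-based cost formula; the function names are illustrative, and the per-1,000-token prices in the usage note are invented, since real prices vary by provider and model.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of observations."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def cost_per_query(
    prompt_tokens: int,
    completion_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
) -> float:
    """Token cost of one request, with input and output priced separately."""
    return (
        prompt_tokens / 1000 * price_in_per_1k
        + completion_tokens / 1000 * price_out_per_1k
    )
```

Tracking the 95th percentile rather than the average matters because a handful of very slow responses can hide behind a healthy mean. As an example with invented prices of 0.002 and 0.006 per 1,000 tokens, a request with 1,500 prompt tokens and 300 completion tokens costs about 0.0048.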

Building a RAG application is less about AI hype and more about organizing your knowledge, applying the right controls, and embedding AI into real business workflows.

| Phase | Primary Goal | Stakeholder Focus | Key Risk Mitigated |
| --- | --- | --- | --- |
| Discovery | Business Case | CEO / CFO | Avoids “Pilot Purgatory” |
| Ingestion | Data Governance | Data Stewards | Protects Proprietary Privacy |
| Precision | Accuracy & Trust | CTO / Legal | Eliminates Hallucinations |
| Scaling | Global Integration | IT Operations | Prevents System Downtime |

Real-World Use Cases of Production RAG Applications in the Enterprise

In 2026, the value of a RAG application is no longer measured by its ability to “chat with a PDF.” Instead, enterprise leaders measure success through time-to-insight and operational de-risking.

When built on a production-ready stack like LangChain and FastAPI, RAG shifts from a novelty to a core utility.

Below are five high-impact, real-world applications where this architecture is currently driving measurable ROI across the enterprise.

1. Financial Services: Real-Time Regulatory Intelligence

In the highly regulated world of finance, the cost of acting on outdated information can result in millions of dollars in fines.

- The Business Challenge: An investment bank needs to synthesize thousands of daily updates from agencies like the SEC and ESMA to ensure compliance.

- The Production Solution: Utilizing Hybrid Search, the system retrieves exact regulatory clauses (Lexical search) while understanding the intent of new mandates (Semantic search). FastAPI’s asynchronous streaming allows compliance officers to receive synthesized summaries of 500-page filings in seconds.

- The Strategic Impact: A 30% reduction in compliance review cycles and a significant decrease in “Regulatory Drift” by ensuring the AI is grounded in the morning’s latest filings.

2. Healthcare & Pharma: Accelerated Clinical Insights

Pharma companies deal with voluminous documentation where accuracy is a life-or-death requirement.

- The Business Challenge: Regulatory affairs specialists spend thousands of hours scanning FDA/EMA guidelines to draft submission documents and stability testing requirements.

- The Production Solution: A RAG system grounded in approved clinical content and SOPs. Using advanced reranking, the system provides evidence-grounded answers to complex queries like “What are the stability testing requirements for a biologic in an NDA submission?”

- The Strategic Impact: Early pilots show over 65% efficiency gains in adverse event case processing and up to 90% accuracy in data extraction from clinical study reports.

3. Manufacturing & Supply Chain: Institutional Knowledge Recovery

As a senior workforce nears retirement, manufacturers face a “Knowledge Gap” that threatens operational continuity.

- The Business Challenge: Capturing decades of “unwritten” expertise in plant operations and troubleshooting complex machinery.

- The Production Solution: RAG applications act as autonomous shift handover tools. By ingesting decades of incident reports and maintenance logs, the system provides junior field engineers with “expert-level” troubleshooting steps.

- The Strategic Impact: A 40% deflection of Tier-1 support tickets and increased production uptime through autonomously generated work instructions.

4. Retail & eCommerce: Context-Aware Conversational Commerce

Basic chatbots often frustrate customers with generic answers. Production RAG turns support into a sales engine.

- The Business Challenge: A retailer needs to provide personalized product recommendations that respect live inventory levels and seasonal trends.

- The Production Solution: Integrating RAG with live content platforms. The system pulls real-time data on browsing history and stock levels to answer: “Is this jacket available in my size in the London store, and what do the latest reviews say about its fit?”

- The Strategic Impact: Higher conversion rates and a dramatic reduction in product returns due to more accurate pre-purchase information.

5. HR & Internal Operations: The “Single Source of Truth”

Information silos are the primary cause of employee “search fatigue,” where staff spend up to 20% of their day just looking for information.

- The Business Challenge: New hires and current staff struggle to navigate fragmented HR policies, security protocols, and benefit plans across different regions.

- The Production Solution: An internal “Knowledge Assistant” using metadata-driven access control. This ensures that a UK-based employee only sees benefits relevant to their region and contract type.

- The Strategic Impact: Onboarding ramp-up time is cut from months to weeks, and HR departments see a massive reduction in repetitive email inquiries.

| Metric | Basic/Demo RAG | Production-Ready RAG (LangChain + FastAPI) |
| --- | --- | --- |
| Response Latency | 10-15 Seconds | < 2 Seconds (with Streaming) |
| Hallucination Rate | 15-20% | < 1% (with Re-ranking/Guardrails) |
| Data Recency | Static (Months old) | Real-time (Minutes old) |
| Traceability | None (Hidden sources) | Full (Direct Source Citations) |

Partner with Bitcot to Build Your Custom Production RAG Application

Building a “cool” RAG demo is a weekend project; building a production-ready system that scales with your enterprise is a specialized engineering feat.

As a leader in AI development, Bitcot specializes in bridging the gap between experimental AI and high-impact business tools. We help organizations move from AI experimentation to scalable, business-grade AI systems that deliver measurable impact.

Our expertise includes:

- Designing secure knowledge architectures

- Integrating AI with existing enterprise systems

- Building scalable APIs and backend services

- Implementing access control and compliance safeguards

- Optimizing AI systems for cost, speed, and accuracy

If your organization is ready to move past pilot programs and deploy a robust, secure RAG engine, Bitcot provides the strategic and technical expertise to make it happen.

The Bitcot Advantage: Product-First AI

Many firms approach AI as a research project. Bitcot approaches it as a business product. We don’t just build models; we build integrated solutions that fit into your existing roadmap and user workflows.

- Custom Framework Expertise: While we utilize best-in-class tools like LangChain and FastAPI, we specialize in custom-coding the logic that makes your application unique.

- Startup Speed, Enterprise Discipline: We leverage our proprietary GenAI Accelerator to move from concept to prototype in as little as a week, without sacrificing the clean architecture and security required for long-term scale.

- Vertical-Specific Solutions: Whether you are in fintech, healthcare, or manufacturing, we understand the specific compliance and data nuances of your industry.

How We Transform Your RAG Strategy

| Our Service | Strategic Business Outcome |
| --- | --- |
| High-Precision Data Ingestion | We implement semantic partitioning and metadata tagging to ensure 99% accuracy in information retrieval. |
| Enterprise Security & SSO | We bridge your AI directly into your corporate security infrastructure, ensuring data privacy and SOC2/GDPR adherence. |
| Observability & Monitoring | We deploy real-time dashboards that track accuracy, latency, and Cost-per-Query, giving leadership full transparency on ROI. |
| Asynchronous Scaling | Using FastAPI, we build systems that handle thousands of concurrent users with sub-second response times. |

The window for being “early” to AI is closing. In 2026, the companies that win will be those that have successfully moved their proprietary knowledge out of static PDFs and into dynamic, RAG-powered engines.

Whether you need an intelligent enterprise co-pilot, a technical support engine, or a secure internal knowledge base, Bitcot has the talent and the blueprint to deliver.

Final Thoughts

The novelty of “talking to your documents” has worn off. In today’s market, a RAG application that is slow, hallucination-prone, or disconnected from your core business logic isn’t just a tech failure; it’s a missed opportunity to lead.

Moving from a basic script to a production-ready engine is a journey that requires more than just code. It requires a mindset shift from “What can this AI do?” to “How can this AI reliably serve my customers and employees at scale?”

By choosing a high-performance stack like LangChain and FastAPI, you aren’t just building a tool; you are building the future nervous system of your company.

It can feel overwhelming to navigate the gap between a promising pilot and a global rollout. You have to worry about data privacy, latency, and keeping costs predictable while the technology itself seems to change every other week. But that is exactly where the biggest competitive advantages are won.

The organizations that commit to professional, scalable architecture today are the ones that will be running circles around their competition tomorrow.

If you’re ready to stop experimenting and start deploying, you don’t have to go it alone. Whether you are looking to automate complex legal reviews, scale your technical support, or unlock years of siloed institutional knowledge, we are here to help you bridge that gap.

At Bitcot, we specialize in turning complex data challenges into seamless digital products. Our team combines deep technical expertise with a product-first approach to deliver high-impact custom RAG development services tailored to your specific business goals.

Are you ready to transform your proprietary data into a revenue-driving asset?

Connect with Bitcot today to start your journey toward a production-ready RAG solution.