We’ve all seen the “magic” of AI.

Maybe your team has built a few internal prototypes or experimented with basic chatbots. But there is a massive, expensive chasm between a cool demo and a production-ready agent that can actually be trusted with your customers and your data.

In the business world, “it mostly works” isn’t good enough. If an AI agent is handling your supply chain queries or assisting your sales team, it needs to be three things: reliable, secure, and fast.

Most AI projects stall because they lack a robust foundation. They work fine in a controlled test, but crumble when faced with high traffic or complex, real-world requests. To turn AI into a genuine value-driver, you need a professional-grade architecture.

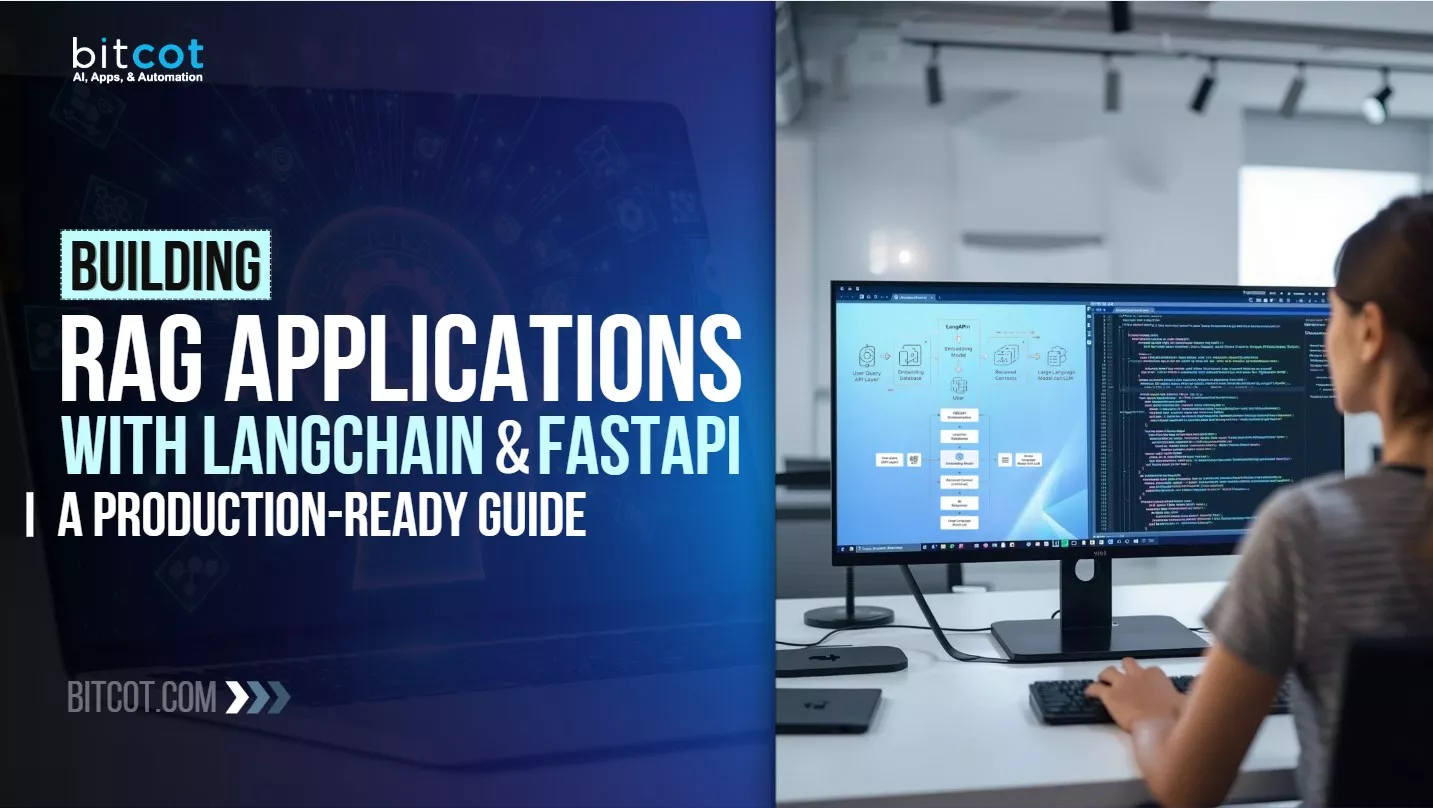

In this guide, we explore the industry-standard “Power Couple” for AI deployment: LangChain and FastAPI.

- LangChain acts as the “brain” of your operation, allowing your AI to use tools, remember past conversations, and follow complex business logic.

- FastAPI is the “engine” that delivers that brain to the world, ensuring the system stays online, responds instantly, and integrates seamlessly with your existing enterprise software.

If you are looking to move AI from a “research project” to a “revenue generator,” you have to solve for the “Boring-but-Vital” stuff:

- Predictability: Ensuring the agent follows your brand guidelines every single time.

- Scalability: Handling 10,000 users as smoothly as you handle ten.

- Security: Protecting proprietary data while still giving the AI the context it needs to be helpful.

- Cost Efficiency: Using the right model for the right task so you aren’t overspending on compute power.

Whether you’re a CTO looking to modernize your stack or a Product Manager trying to ship a reliable feature, this breakdown will show you how to build AI that doesn’t just talk, but actually works.

Key Takeaways: The Production AI Roadmap for 2026

- The Reliability Shift: Moving from prototypes to production requires a transition from “mostly works” to three pillars of operational excellence: reliability, security, and speed. Success is defined by an agent working correctly 10,000 times in a row, not just once in a demo.

- The “Power Couple” Stack: LangChain acts as the “brain,” handling reasoning and tool usage, while FastAPI serves as the “engine,” providing an async-first architecture to handle high-concurrency traffic without performance lag.

- Architectural Guardrails: To mitigate “hallucinations” and security risks like prompt injection, production agents must use Pydantic for rigid data validation and LangGraph for stateful loops that allow the AI to self-correct from errors.

- Strategic Governance: Implementing Human-in-the-Loop (HITL) gates allows for the automation of 90% of a task while requiring a human signature for high-value actions, effectively balancing automation with risk management.

- Quantifiable ROI: Modern agentic workflows deliver measurable financial impact, including up to a 20% reduction in expedited shipping costs for logistics and a 60% reduction in Tier-1 support tickets for customer experience teams.

- Future-Proofing: A modular, model-agnostic design eliminates vendor lock-in, allowing enterprises to switch LLM providers (e.g., OpenAI to Anthropic) in minutes, reducing future re-engineering costs by up to 40%.

What Makes an AI Agent Production-Ready?

In a laboratory or a demo, an AI agent only needs to work once to be considered a success. In a business environment, it needs to work correctly ten thousand times in a row.

Moving from a prototype to a production-ready system means shifting your focus from “intelligence” to “reliability.”

To be truly enterprise-grade, an agent must move beyond a simple chat interface and meet four specific pillars of operational excellence.

Deterministic Guardrails in a Probabilistic World

LLMs are probabilistic by nature; they don’t always give the same answer twice. A production-ready agent uses structured workflows to ensure that while the AI handles the conversation creatively, the business logic remains rigid.

This means implementing “kill switches” for infinite loops and validation layers that check an agent’s output against your company’s Standard Operating Procedures (SOPs) before the user ever sees it.
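Both ideas fit in a few lines of plain Python. This is a minimal sketch, not LangChain’s API: `run_agent`, `step_fn`, `max_steps`, and the banned-phrase check are hypothetical names chosen for illustration, and a real SOP check would be far richer than a phrase list.

```python
from typing import Callable, Optional


class StepLimitExceeded(RuntimeError):
    """Raised when the agent loop hits its kill switch."""


def run_agent(step_fn: Callable[[int], Optional[str]], max_steps: int = 5) -> str:
    """Call step_fn until it returns a final answer or the step budget runs out."""
    for step in range(max_steps):
        answer = step_fn(step)
        if answer is not None:
            return answer
    # Kill switch: stop instead of looping (and billing) forever.
    raise StepLimitExceeded(f"no answer after {max_steps} steps")


def passes_sop(answer: str, banned_phrases: tuple[str, ...]) -> bool:
    """Toy validation layer: reject drafts containing phrases the SOP forbids."""
    lowered = answer.lower()
    return not any(phrase in lowered for phrase in banned_phrases)
```

The point of the design is that the loop budget and the output check live outside the model, so they behave the same way on every request.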

Radical Observability and Tracing

When a standard software program crashes, you get an error code. When an AI agent fails, it might simply give a very polite, very wrong answer. Production systems require full-stack tracing. You need to see the “thought process” of the agent:

- Which specific tool did it choose?

- What was the exact prompt sent to the model?

- How much did that specific interaction cost in tokens?

Without this visibility, debugging a “hallucination” becomes an expensive game of guesswork.
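To make those three questions concrete, here is a rough sketch of what a trace record could hold. It is not the LangSmith data model; the `Trace` and `TraceEvent` classes and the per-1K-token prices are assumptions for illustration.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TraceEvent:
    tool: str                # which tool the agent chose
    prompt: str              # the exact prompt sent to the model
    prompt_tokens: int
    completion_tokens: int
    timestamp: float = field(default_factory=time.time)


@dataclass
class Trace:
    # Hypothetical prices per 1,000 tokens; substitute your provider's real rates.
    price_in_per_1k: float
    price_out_per_1k: float
    events: list[TraceEvent] = field(default_factory=list)

    def record(self, tool: str, prompt: str, prompt_tokens: int, completion_tokens: int) -> None:
        self.events.append(TraceEvent(tool, prompt, prompt_tokens, completion_tokens))

    def total_cost(self) -> float:
        """Sum input and output token costs across every recorded step."""
        return sum(
            e.prompt_tokens / 1000 * self.price_in_per_1k
            + e.completion_tokens / 1000 * self.price_out_per_1k
            for e in self.events
        )
```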

Memory with a Purpose

A prototype often has “goldfish memory”; it forgets everything the moment the window closes. A production agent requires a sophisticated state management system.

This involves more than just saving a chat log; it means distinguishing between short-term session context and long-term user preferences, all while staying within the “context window” limits of the AI to prevent performance lag.
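One way to picture the split between session context and long-term preferences is the sketch below. `AgentMemory` is a hypothetical class, not a LangChain component, and it counts turns rather than tokens to keep the example short.

```python
from collections import deque


class AgentMemory:
    def __init__(self, max_turns: int = 4):
        # Short-term: only the most recent turns, dropped when the session ends.
        self.session: deque[tuple[str, str]] = deque(maxlen=max_turns)
        # Long-term: preferences that survive across sessions.
        self.preferences: dict[str, str] = {}

    def add_turn(self, role: str, text: str) -> None:
        self.session.append((role, text))

    def remember(self, key: str, value: str) -> None:
        self.preferences[key] = value

    def end_session(self) -> None:
        self.session.clear()

    def build_context(self) -> str:
        """Assemble what the model sees: stable preferences plus recent turns."""
        prefs = "; ".join(f"{k}={v}" for k, v in sorted(self.preferences.items()))
        turns = "\n".join(f"{role}: {text}" for role, text in self.session)
        return f"Known preferences: {prefs}\n{turns}"
```

In production the preferences would live in a database rather than a dictionary, but the separation of the two lifetimes is the part that matters.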

Security as a Foundation, Not an Add-on

In production, your agent is a representative of your brand with access to your internal tools.

This introduces unique risks, such as prompt injection (where a user tries to “trick” the AI into ignoring its instructions). A robust agent must operate on the principle of Least Privilege Access, meaning it only has the exact permissions it needs to perform its job and nothing more.
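Least privilege for an agent often comes down to a deny-by-default allow-list of tools per role. The sketch below assumes hypothetical role and tool names and is independent of any framework.

```python
from typing import Callable

# Hypothetical roles and tool names, for illustration only.
ROLE_TOOLS: dict[str, frozenset[str]] = {
    "marketing_agent": frozenset({"search_campaigns"}),
    "hr_agent": frozenset({"lookup_employee"}),
}


def call_tool(role: str, tool_name: str, registry: dict[str, Callable[..., str]], **kwargs) -> str:
    """Run a tool only if the role's allow-list names it; unknown roles get nothing."""
    if tool_name not in ROLE_TOOLS.get(role, frozenset()):
        raise PermissionError(f"{role!r} may not call {tool_name!r}")
    return registry[tool_name](**kwargs)
```

Because the check sits in ordinary code rather than in the prompt, a successful prompt injection still cannot reach a tool the role was never granted.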

Why Simple Chatbots Fail in Real-World Use Cases

Many businesses assume that “custom-built” is synonymous with “production-ready.” They hire a developer to write a custom Python wrapper around an LLM and assume the job is done.

However, there is a massive difference between a standalone script and a production-ready architecture. A simple custom-coded chatbot, even one built from scratch, will almost always hit a “sophistication ceiling.”

Here is why a simple chatbot isn’t enough for a real-world business.

1. The “Logic Spaghetti” Problem

When you build a basic custom chatbot, you usually hard-code the instructions. As soon as you want that bot to do something complex, like check a database only if the user asks about an order, but search a PDF if they ask about a policy, your code starts looking like a bowl of spaghetti.

- How LangChain solves this: It provides Chains and Agents. Instead of writing “if/else” statements for every scenario, LangChain allows the AI to dynamically decide which tool to use. It turns your bot from a fixed flowchart into a reasoning engine that can handle “unscripted” business logic.
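The pattern behind this is simple: tools are declared with a name and a description, the model picks one, and a dispatcher runs it. The sketch below is not LangChain code. `fake_model_choice` is a stand-in for the LLM that just counts overlapping words, and every name in it is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


def fake_model_choice(question: str, tools: list[Tool]) -> str:
    """Stand-in for the LLM: pick the tool whose description shares the most words."""
    words = set(question.lower().split())
    best = max(tools, key=lambda t: len(words & set(t.description.lower().split())))
    return best.name


def answer(question: str, tools: list[Tool], choose=fake_model_choice) -> str:
    by_name = {t.name: t for t in tools}
    chosen = choose(question, tools)
    if chosen not in by_name:
        # Never trust the model's choice blindly; reject unknown tool names.
        raise ValueError(f"model chose unknown tool {chosen!r}")
    return by_name[chosen].run(question)
```

Adding a new capability means registering one more `Tool`, not threading another branch through the control flow.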

2. The Bottleneck of Synchronous Processing

A standard Python script usually processes one thing at a time. If User A asks a complex question that takes 10 seconds to answer, User B (and the rest of your server) might be stuck waiting.

- How FastAPI solves this: FastAPI is built on asynchronous logic and designed to handle thousands of “waits” simultaneously. While your AI is “thinking” or fetching data from a database, FastAPI is already moving on to serve the next customer. This is the difference between a smooth user experience and a spinning loading wheel that kills conversion rates.
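The effect is easy to see with Python’s standard `asyncio` library, which is the same mechanism FastAPI’s async endpoints rely on. In this sketch `slow_model_call` is a hypothetical stand-in for a model or database call, and the delay is simulated with `asyncio.sleep`.

```python
import asyncio
import time


async def slow_model_call(user: str, delay: float) -> str:
    # Stands in for waiting on an LLM or a database; the event loop is free meanwhile.
    await asyncio.sleep(delay)
    return f"answer for {user}"


async def serve_all(users: list[str], delay: float) -> list[str]:
    # All calls wait concurrently instead of queueing behind one another.
    return await asyncio.gather(*(slow_model_call(u, delay) for u in users))


def timed_run(n_users: int = 20, delay: float = 0.05) -> tuple[list[str], float]:
    start = time.perf_counter()
    results = asyncio.run(serve_all([f"user{i}" for i in range(n_users)], delay))
    return results, time.perf_counter() - start
```

Twenty simulated users each waiting 0.05 seconds finish in roughly the time of one wait, where a one-at-a-time loop would need about a full second.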

3. Brittle Tool Connections

In a simple custom bot, every time you want to connect to a new piece of software (like your CRM or a Calendar), you have to write custom integration code. If that software changes its API, your bot breaks.

- The LangChain Advantage: It offers a standardized “Tool” interface. You aren’t writing one-off integrations; you are using a modular ecosystem. If you want to swap your database from PostgreSQL to Pinecone, or your model from OpenAI to Anthropic, you can do it in minutes rather than weeks of rewriting code.

4. Lack of “System-Level” Validation

A basic custom script often lacks data validation. If a user sends a weirdly formatted request or a malicious prompt, a simple script might crash or leak internal data.

- The FastAPI Advantage: It uses Pydantic for rigorous data validation. Every piece of information entering or leaving your AI agent is checked against a strict schema. This ensures that your system doesn’t just “work”; it stays secure and predictable under the pressure of real-world traffic.
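To show what “checked against a strict schema” means, here is a hand-rolled sketch using only the standard library. Pydantic expresses the same rules declaratively; the `ChatRequest` fields and the 2,000-character limit are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChatRequest:
    user_id: str
    message: str

    def __post_init__(self) -> None:
        if not isinstance(self.user_id, str) or not self.user_id.strip():
            raise ValueError("user_id must be a non-empty string")
        if not isinstance(self.message, str):
            raise ValueError("message must be a string")
        if not 1 <= len(self.message) <= 2000:
            raise ValueError("message must be 1-2000 characters")


def parse_request(payload: dict) -> ChatRequest:
    """Reject payloads with missing or unexpected fields before any model call."""
    if set(payload) != {"user_id", "message"}:
        raise ValueError("payload must contain exactly user_id and message")
    return ChatRequest(**payload)
```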

5. Memory Decay

A basic custom bot usually just appends the whole chat history to every new message. Very quickly, this becomes too expensive (token costs) or too long for the AI to “read,” causing it to get confused or “hallucinate.”

- The LangChain Advantage: It has sophisticated Memory Management. It can automatically summarize old parts of the conversation, store long-term “facts” in a separate database, and only show the AI what it needs to see. This keeps your costs down and your AI’s “brain” sharp over long-term customer relationships.
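A rough sketch of that summarize-and-trim idea follows. It is not LangChain’s memory implementation: `count_tokens` is a crude word count, `summarize` is whatever summarizer you pass in, and the summary line itself is not counted against the budget.

```python
from typing import Callable


def count_tokens(text: str) -> int:
    # Crude stand-in: real systems use the model's own tokenizer.
    return len(text.split())


def compact_history(turns: list[str], budget: int, summarize: Callable[[list[str]], str]) -> list[str]:
    """Keep the newest turns that fit the budget; fold older ones into one summary."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()
    older = turns[: len(turns) - len(kept)]
    if older:
        return [summarize(older)] + kept
    return kept
```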

That’s why modern AI applications are moving toward agent-based architectures, systems that combine language models with memory, tools, structured reasoning, and strong backend engineering. Instead of just generating text, these agents can plan, act, recover from errors, and integrate deeply with the rest of your software stack.

| Feature | LangChain + FastAPI | Flask / Django |
| --- | --- | --- |
| Concurrency | High (Async native) | Limited (Sync by default) |
| AI Tooling | Built-in integrations | Requires custom wrappers |
| Schema Validation | Automatic (Pydantic) | Manual / Plug-in based |
| Developer Speed | High (Pre-built components) | Moderate (Build from scratch) |

Benefits of Using LangChain and FastAPI for Enterprise AI Agents

Once you move beyond basic chatbots and start thinking about production-grade AI agents, the question quickly becomes: What stack actually supports this at an enterprise level?

You need more than a model wrapper. You need orchestration, structure, observability, and an API layer that plays nicely with the rest of your infrastructure.

That’s where LangChain and FastAPI form a seriously powerful combo.

By 2026, the novelty of “talking to a computer” has faded, and businesses are now demanding ROI. To achieve this, the technical architecture must support sophisticated business requirements that a basic script simply cannot handle.

Using LangChain and FastAPI together creates a synergy that directly addresses the core needs of an enterprise: scalability, flexibility, and a high-quality user experience.

Here is why this specific stack is the preferred choice for enterprise architects.

Modular Orchestration with LangChain

LangChain serves as the “brain” of the agent. Its modularity allows enterprises to build resilient systems with AI agents that are not locked into a single provider or logic flow.

The framework encourages a composable architecture: prompts, tools, memory, retrievers, and chains are all separate components. This modularity is gold in enterprise settings, where different teams may own different parts of the system and where components must evolve independently.

- Model Agnostic Design: Enterprises can switch between providers like OpenAI, Anthropic, or internal open-source models by changing the model configuration rather than rewriting the agent’s logic.

- Advanced Memory Management: LangChain provides sophisticated context-retrieval systems (RAG) that allow agents to stay grounded in company-specific facts rather than general internet data.

- Complex Workflows: Tools like LangGraph enable stateful, reliable logical paths, ensuring the agent follows company SOPs (Standard Operating Procedures).
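Model-agnostic design usually means the business logic depends on a small interface rather than on a vendor SDK. The sketch below illustrates the idea with hypothetical `ProviderA` and `ProviderB` classes that return canned text; it is not LangChain’s model abstraction.

```python
from typing import Protocol


class ChatModel(Protocol):
    def complete(self, prompt: str) -> str: ...


class ProviderA:
    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"  # a real class would call the vendor API here


class ProviderB:
    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


# Hypothetical registry; swapping providers is a change to this one lookup key.
MODELS = {"provider-a": ProviderA, "provider-b": ProviderB}


def build_model(name: str) -> ChatModel:
    return MODELS[name]()


def summarize_ticket(model: ChatModel, ticket: str) -> str:
    # Business logic only sees the ChatModel interface, never a specific vendor.
    return model.complete(f"Summarize: {ticket}")
```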

Business Benefit: Flexibility to pivot between AI models as the market changes without rebuilding the entire system.

ROI: Future-proofs your investment by eliminating vendor lock-in and reducing future re-engineering costs by up to 40%.

High-Performance Delivery with FastAPI

If LangChain is the brain, FastAPI is the nervous system. It handles the communication between the AI logic and the rest of the enterprise ecosystem.

FastAPI is built for high-performance, async-first Python services, exactly what you want when model calls and tool invocations can be slow or unpredictable.

- Async-First Architecture: AI agents take time to “think.” FastAPI manages thousands of concurrent users without blocking, even while waiting for an AI response.

- Automatic Type Safety: By using Pydantic, FastAPI ensures every piece of data matches a strict schema, preventing “hallucinated” AI outputs from breaking internal databases.

- Instant Documentation: FastAPI automatically generates Swagger (OpenAPI) documentation, allowing other internal engineering teams to integrate the AI agent instantly.

Business Benefit: Industrial-strength stability that ensures the AI agent can handle real-world traffic spikes without crashing.

ROI: Lower infrastructure overhead and faster internal adoption, reducing the “integration tax” usually paid when connecting new software to old systems.

Enterprise-Grade Security and Scaling

Beyond the code, this stack simplifies the “Day 2” operations of running an agent at scale within a regulated environment.

- Standardized Security: FastAPI makes it easy to implement OAuth2 and fine-grained access control, ensuring a “Marketing Agent” cannot access sensitive “HR” data.

- Observability with LangSmith: This provides a full audit trail. You can see exactly which tool the agent used and why it made a specific decision.

- Streaming for User Experience: Support for Server-Sent Events (SSE) allows LangChain to stream the agent’s “thoughts” to the user in real-time, making the app feel instantaneous.
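Server-Sent Events are just text frames: one or more `field: value` lines followed by a blank line. The generator below is a sketch of how model output chunks could be framed. The `{"text": ...}` payload shape and the closing `done` event are assumptions, and in FastAPI a generator like this would typically be wrapped in a `StreamingResponse` with the `text/event-stream` media type.

```python
import json
from typing import Iterable, Iterator


def sse_events(chunks: Iterable[str]) -> Iterator[str]:
    """Frame each chunk as one SSE message, then signal completion."""
    for chunk in chunks:
        # JSON-encoding keeps newlines inside a chunk from breaking the frame.
        yield f"data: {json.dumps({'text': chunk})}\n\n"
    # Hypothetical end-of-stream marker so the client knows when to stop listening.
    yield "event: done\ndata: {}\n\n"
```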

Business Benefit: Enhanced data governance and a superior user experience that drives high internal adoption.

ROI: Mitigates the massive financial and reputational risks of data leaks while increasing employee output through a more responsive tool.

Advanced Memory and Personalization

In an enterprise, an agent that “forgets” is a liability. This stack allows for sophisticated persistence.

Enterprises care deeply about continuity across conversations, sessions, and users. LangChain supports different memory patterns, from short-term conversational memory to long-term, persistent context stored in databases or vector stores.

- Session Persistence: Remembers user preferences and past issues across multiple days, not just a single chat session.

- Vector Memory: Allows the agent to “search” through its own history of millions of past interactions to find relevant solutions.

Business Benefit: Dramatically improves customer and employee satisfaction by providing a personalized, “human-like” continuity.

ROI: Higher user retention and a 15% reduction in support ticket resolution time through better context awareness.

Multi-Agent Collaboration

Enterprise tasks are often too big for one AI. This stack enables a “Manager” agent to delegate tasks to “Specialist” agents.

- Task Delegation: A “Research Agent” can find data while a “Drafting Agent” writes the report and a “Compliance Agent” checks for risks.

- Cross-Departmental Utility: The same infrastructure can host multiple specialist agents for HR, Legal, and Marketing simultaneously.

Business Benefit: Enables the automation of complex, multi-step business processes that basic chatbots cannot handle.

ROI: Multiplies workforce output; organizations report up to 5x ROI within the first year by automating high-value internal workflows.

Built-in Observability and Compliance

Enterprise AI requires a “paper trail.” You cannot deploy a “black box” in a regulated industry.

- Full Traceability: Tools like LangSmith (integrated with LangChain) allow you to see exactly which data source led to a specific AI answer.

- Access Control: FastAPI makes it easy to implement OAuth2, ensuring agents only access data that the specific user is authorized to see.

Business Benefit: Provides the governance and audit trails necessary to satisfy Legal, Security, and Compliance departments.

ROI: Avoids potential legal liabilities and regulatory fines; reduces the “compliance review” bottleneck for new AI projects by 50%.

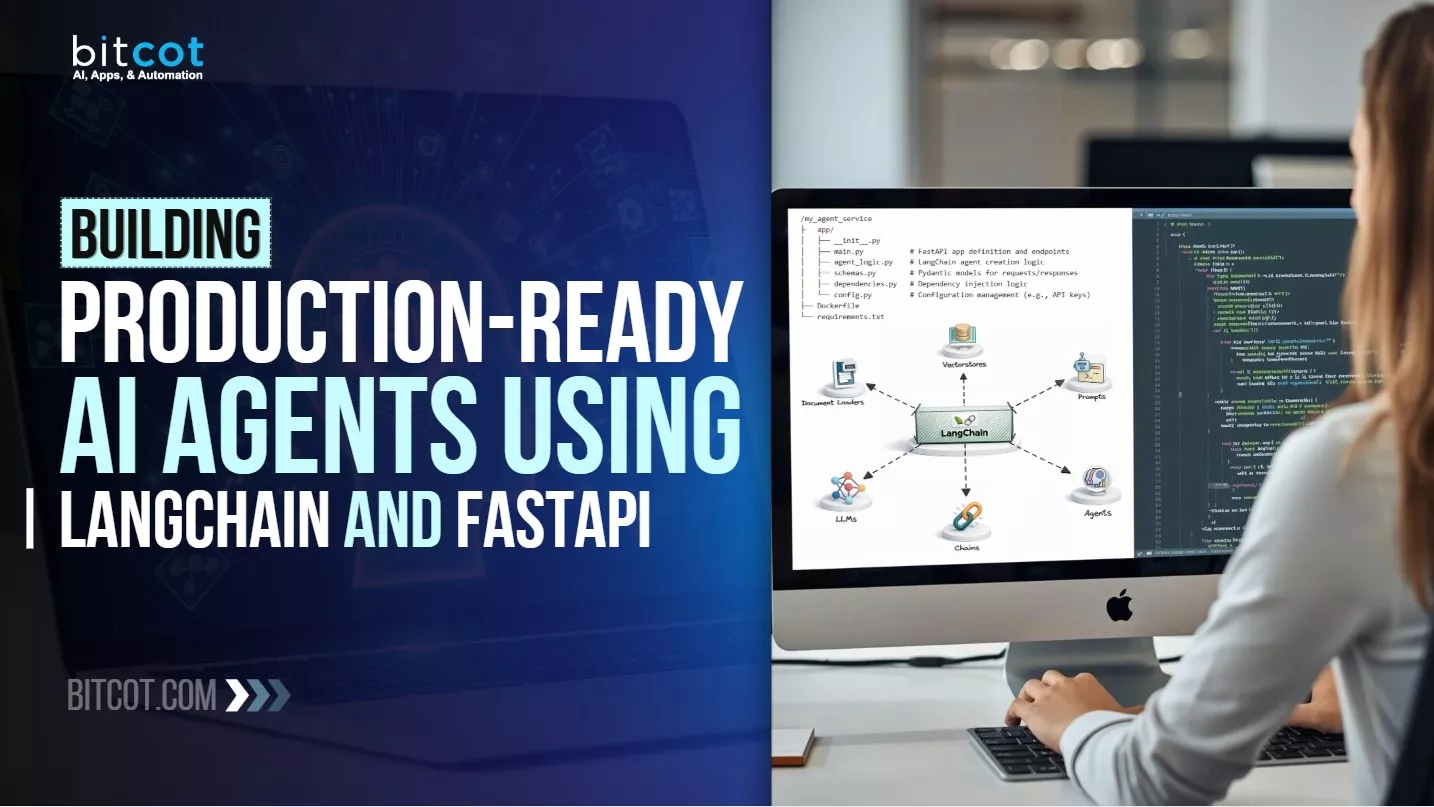

How to Build Production-Ready AI Agents Using LangChain and FastAPI

For a business executive in 2026, the value of an AI agent is measured not by its “intelligence,” but by its reliability, security, and ROI. Moving from a prototype to a production system requires shifting from a “chatbot” mindset to an “Autonomous Workflow” mindset.

Here is the strategic step-by-step roadmap for deploying production-ready AI agents using the LangChain and FastAPI stack.

Phase 1: Strategic Alignment & ROI Mapping

In 2026, “experimental” budgets have dried up. Executives now demand a clear Value-to-Token ratio.

- Identify High-Leverage Workflows: Don’t build a general assistant. Focus on multi-step processes where a human currently spends 20+ minutes (e.g., insurance claims processing, vendor contract analysis, or personalized sales routing).

- Establish Key Performance Indicators (KPIs):

  - Deflection Rate: % of tasks completed without human intervention.

  - Cost per Task: Compare the AI API cost vs. the human hourly rate.

  - Accuracy Threshold: The minimum acceptable “Pass Rate” before the agent is allowed to go live.
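The three KPIs reduce to simple arithmetic. The helper names below are hypothetical, and the figures in the usage note are made-up inputs, not benchmarks.

```python
def deflection_rate(completed_by_agent: int, total_tasks: int) -> float:
    """Share of tasks finished without human intervention."""
    if total_tasks <= 0:
        raise ValueError("total_tasks must be positive")
    return completed_by_agent / total_tasks


def savings_per_task(api_cost: float, human_hourly_rate: float, human_minutes: float) -> float:
    """Human cost of the task minus the AI API cost for the same task."""
    human_cost = human_hourly_rate * human_minutes / 60
    return human_cost - api_cost


def ready_for_launch(passed: int, evaluated: int, threshold: float) -> bool:
    """True when the measured pass rate meets the agreed accuracy threshold."""
    if evaluated <= 0:
        raise ValueError("evaluated must be positive")
    return passed / evaluated >= threshold
```

For instance, a 20-minute task at a $30 hourly rate costs $10 of human time, so an agent that completes it for $0.40 in API calls saves $9.60 per task.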

Phase 2: Architecting for Governance (LangGraph)

Standard AI builds are “linear”; if they hit a snag, they fail. In 2026, we use LangGraph (within the LangChain ecosystem) to create Stateful Agentic Loops.

- The “State Machine” Advantage: Unlike a simple script, LangGraph allows the agent to maintain a “memory” of the entire transaction. If an API call to your CRM fails, the agent doesn’t quit; it reasons through the error and tries a different path.

- Human-in-the-Loop (HITL) Gates: This is your primary risk mitigation tool. You can program the agent to handle 90% of a task but automatically pause and wait for a human manager’s digital signature before executing high-value actions (like issuing a refund >$500).
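Stripped of the framework, a HITL gate is a threshold check that returns a “pending” state instead of acting. The sketch below uses the $500 refund example from the text. The class and function names are hypothetical, and LangGraph provides its own pause-and-resume mechanism that this does not reproduce.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    EXECUTED = "executed"
    PENDING_APPROVAL = "pending_approval"


APPROVAL_THRESHOLD_USD = 500.0  # taken from the refund example above


@dataclass
class RefundRequest:
    order_id: str
    amount_usd: float


def process_refund(req: RefundRequest, approved_by: Optional[str] = None) -> Decision:
    """Execute small refunds; park large ones until a named human approves."""
    if req.amount_usd > APPROVAL_THRESHOLD_USD and approved_by is None:
        return Decision.PENDING_APPROVAL
    return Decision.EXECUTED
```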

Phase 3: Building the “Digital Nervous System” (FastAPI)

FastAPI is the industry standard for 2026 because it handles the high-concurrency needs of enterprise AI.

- Asynchronous Processing: AI models can take several seconds to “think.” FastAPI allows your server to handle thousands of other users simultaneously while waiting for that one AI response.

- Security & Validation (Pydantic): 2026 is the year of “Prompt Injection” attacks. FastAPI uses Pydantic to ensure that any data entering or leaving the AI follows a strict business schema. If the AI’s output does not match that schema, for example a response carrying an unexpected field such as a “hallucinated” credit card number or unauthorized internal data, the system rejects it before it reaches the user.

Phase 4: Production Hardening & Cost Control

This is where 90% of AI projects fail. Hardening turns a script into a business asset.

The Observability Stack (LangSmith)

You cannot manage what you cannot see. LangSmith provides a full audit trail for every “thought” the agent has.

- Executive Benefit: If an agent makes a mistake, you have a “Black Box” recording to show exactly why, which is critical for legal and compliance audits.

For example, when a customer complains about an AI response, you can pull the “trace” and see exactly which prompt or data point caused the error. This is vital for compliance and legal protection.

Economic Optimization (Semantic Caching)

In 2026, API costs are a major OpEx line item.

- The Strategy: Implement a cache at the FastAPI layer. If a customer asks a question similar to one asked ten minutes ago, the system serves the “cached” answer for $0.00 instead of paying for a new LLM calculation.

For example, if 100 employees ask “What is our 2026 holiday policy?”, the FastAPI layer should recognize the meaning (semantics) of the question and serve the answer from a cheap cache rather than paying $0.05 to a high-end model like GPT-5 every time.
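Here is a toy version of that cache. A production semantic cache would compare embedding vectors; this sketch swaps in word-overlap (Jaccard) similarity so it runs with the standard library alone, and the 0.6 threshold is an arbitrary assumption.

```python
import re
from typing import Callable, Optional


def _tokens(text: str) -> frozenset[str]:
    return frozenset(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets; a stand-in for embedding similarity."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


class SemanticCache:
    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold
        self._entries: list[tuple[str, str]] = []

    def get(self, question: str) -> Optional[str]:
        best = max(self._entries, key=lambda e: similarity(question, e[0]), default=None)
        if best is not None and similarity(question, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, question: str, answer: str) -> None:
        self._entries.append((question, answer))


def answer(question: str, cache: SemanticCache, call_model: Callable[[str], str]) -> str:
    cached = cache.get(question)
    if cached is not None:
        return cached  # no model call, no token cost
    result = call_model(question)
    cache.put(question, result)
    return result
```

The threshold is the main tuning knob: set it too low and users get answers to questions they did not ask, too high and the cache rarely hits.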

Model Fallback Strategies

Don’t be a victim of provider downtime.

- The Setup: Configure your LangChain logic so that if your primary model (e.g., GPT-5) is lagging, the system automatically falls back to a faster, cheaper “Flash” model (like Claude Haiku or Gemini Flash) to keep your service running.
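The fallback logic itself is a short loop over an ordered list of models. In this sketch each model is just a callable that takes a prompt, and `call_with_fallback` is a hypothetical helper, not a LangChain function.

```python
from typing import Callable, Sequence


def call_with_fallback(prompt: str, models: Sequence[Callable[[str], str]]) -> str:
    """Try each model in priority order; return the first successful answer."""
    last_error: Exception | None = None
    for model in models:
        try:
            return model(prompt)
        except Exception as exc:  # timeouts, rate limits, provider outages
            last_error = exc
    raise RuntimeError(f"all {len(models)} models failed") from last_error
```

A real deployment would also put a timeout on each call, since a model that is “lagging” may never raise an error on its own.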

| Category | Budget Allocation | Why it’s Non-Negotiable |
| --- | --- | --- |
| Logic (LangGraph) | 30% | Ensures the agent can self-correct and handle complexity. |
| Infrastructure (FastAPI) | 20% | Provides the speed and security required for enterprise scale. |
| Governance (HITL) | 25% | Mitigates legal risk by keeping humans in control of key decisions. |
| Observability (Tracing) | 25% | Crucial for debugging, auditing, and continuous improvement. |

Real-World Use Cases of Production AI Agents in the Enterprise

In 2026, the discussion around AI has shifted from “What can it say?” to “What can it accomplish?”

Leading enterprises are no longer building chatbots; they are deploying autonomous workflow agents that operate across siloed departments.

Here are the high-impact, real-world use cases where the LangChain + FastAPI stack is delivering measurable ROI this year.

1. Supply Chain: Real-Time Logistics Navigators

Global supply chains are volatile. Enterprise agents now serve as 24/7 watchtowers that don’t just alert; they act.

- The Workflow: An agent monitors weather patterns and port congestion data via API.

- The Action: If a delay is predicted, the agent uses LangGraph to reason through alternatives (e.g., air vs. sea), queries the ERP for inventory levels, and drafts a re-routing proposal.

- The FastAPI Role: It provides the high-speed interface to pull live telemetry from IoT sensors on shipping containers.

- ROI: 15-20% reduction in “expedited shipping” costs by catching delays 48 hours earlier than human teams.

2. Finance: Automated CAPEX & Budget Optimization

Large-scale Capital Expenditure (CAPEX) reviews used to take months of spreadsheet gymnastics.

- The Workflow: Regional managers email budget requests. An agent (powered by LangChain) parses the unstructured text, extracts financial figures, and compares them against the 2026 strategic roadmap.

- The Action: The agent runs a linear programming model (via a FastAPI microservice) to suggest an optimized portfolio of projects that maximizes ROI within the current budget ceiling.

- ROI: Transitioning from “periodic reviews” to “continuous optimization,” allowing firms to reallocate capital in weeks rather than fiscal years.
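To illustrate the optimization step, the sketch below picks the set of projects with the highest expected return that fits under a budget ceiling. The text describes a linear programming model; this example swaps in a brute-force search over project subsets, which is only practical for a handful of projects. All project names and figures are invented.

```python
from itertools import combinations


def best_portfolio(projects: dict[str, tuple[float, float]], budget: float) -> tuple[set[str], float]:
    """projects maps name -> (cost, expected_return). Exhaustive search over subsets."""
    best_names: tuple[str, ...] = ()
    best_return = 0.0
    names = sorted(projects)
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            cost = sum(projects[n][0] for n in combo)
            expected = sum(projects[n][1] for n in combo)
            if cost <= budget and expected > best_return:
                best_names, best_return = combo, expected
    return set(best_names), best_return
```

With more than a few dozen candidate projects the number of subsets explodes, which is where a proper solver earns its place.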

3. Human Resources: The “Onboarding Orchestrator”

In the frontline workforce (logistics, retail, healthcare), speed to hire is the primary competitive advantage.

- The Workflow: Multi-agent systems (using CrewAI or LangGraph) coordinate the entire candidate journey. One agent screens resumes, another performs sentiment analysis on video intros, and a third generates legal documents.

- The Action: A logistics firm used this to staff a new fulfillment center, cutting the “hiring-to-onboarded” time from 14 days to 72 hours.

- The FastAPI Role: Validates and secures sensitive PII (Personally Identifiable Information) before it ever touches the LLM brain.
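A minimal sketch of scrubbing PII before text reaches the model might look like this. The two regular expressions cover only email addresses and US Social Security number formats and are assumptions for illustration; real PII detection needs far broader coverage.

```python
import re

# Deliberately simple patterns; they will miss many real-world PII formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace recognized PII with placeholders before the text is sent to an LLM."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)
```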

4. Healthcare: Prior Authorization Agents

Insurance paperwork is the “undifferentiated toil” of the medical world.

- The Workflow: An agent receives a doctor’s request for a specialized procedure. It uses RAG (Retrieval-Augmented Generation) to compare the request against thousands of pages of insurance policy logic.

- The Action: It identifies missing documentation, automatically pings the doctor’s office for the correct lab results, and submits a completed “pre-approved” packet to the insurer.

- ROI: 40% reduction in administrative overhead for clinics and faster care delivery for patients.

5. Retail: Semantic Inventory & Pricing

Standard pricing engines are “reactive.” 2026 agents are “proactive.”

- The Workflow: Agents monitor competitor pricing and social media trends in real-time.

- The Action: If a product goes viral on a niche platform, the agent adjusts the dynamic pricing on the website (within pre-approved FastAPI guardrails) and triggers an immediate purchase order to suppliers to prevent a stock-out.

- ROI: Increased “Capture Rate” during market spikes without needing 24/7 human market analysts.

| Industry | Primary Agent Task | Business Impact |
| --- | --- | --- |
| Manufacturing | Predictive Maintenance & Parts Ordering | 30% reduction in unplanned downtime. |
| Legal | Contract Compliance & Deviation Analysis | 80% faster “first-pass” legal reviews. |
| Customer Experience | Cross-Channel Intent Routing | 60% reduction in Tier-1 support tickets. |
| Energy | Grid Load Balancing & Trading | Optimized energy arbitrage in volatile markets. |

Partner with Bitcot to Build Your Custom Production AI Agent

Building an enterprise-grade AI agent is a high-stakes engineering feat.

While the roadmap is clear, execution requires a partner who understands the nuance of governance, integration, and performance.

Bitcot is an award-winning AI development firm specializing in transforming complex business requirements into production-ready agentic workflows. In 2026, we help organizations skip the “pilot purgatory” and move directly to measurable ROI.

Why Bitcot is the Partner of Choice for 2026

We don’t just build chatbots; we architect agentic ecosystems. Our approach combines the rapid development of low-code tools with the “no-limits” power of custom Python frameworks like LangChain and FastAPI.

1. The 8-12 Week Launchpad

While internal teams often take 12-18 months to navigate the complexities of AI infra, Bitcot’s proven 5-phase process delivers a Minimum Viable Product (MVP) in 8 to 12 weeks.

- Rapid Validation: We provide a working prototype in as little as 2-3 weeks.

- Startup Speed, Enterprise Discipline: We move fast, but within your existing security and compliance frameworks.

2. Vertical-Specific Expertise

We don’t believe in “one-size-fits-all” AI. We build agents tailored to the unique logic of your industry:

- Healthcare: HIPAA-grade triage and clinical documentation agents.

- Finance: Agents for automated CAPEX reviews and fraud detection.

- Supply Chain: Real-time logistics navigators that connect directly to your ERP and IoT data.

3. Deep Technical Mastery of the 2026 Stack

Our engineers are “LLM-native.” We specialize in the exact technologies discussed in this guide:

- LangGraph Orchestration: Designing agents that can self-correct and reason through complex, multi-step tasks.

- FastAPI & Pydantic: Building the high-performance “nervous system” with zero-trust security and rigid data validation.

- Observability & Governance: Implementing LangSmith and Langfuse to ensure every AI action is auditable, explainable, and safe.

| Model | Ideal For | What You Get |
| --- | --- | --- |
| Process Assessment | Discovery Phase | A 2-week evaluation identifying your top 3 high-ROI AI opportunities. |
| Pilot Program | Proving Value | A focused, 10-week build of a single high-impact agent to prove ROI. |
| Dedicated Team | Scaled Transformation | A full-stack squad of AI engineers and product leads to build your enterprise-wide AI roadmap. |

The gap between companies that use AI and companies that deploy AI agents is the new competitive frontline. Don’t leave your 2026 strategy to chance.

Final Thoughts

Building an AI agent in 2026 isn’t just about the code; it’s about a fundamental shift in how we think about work. We are moving from a world where we “use” software to a world where we “collaborate” with it.

The LangChain and FastAPI stack is powerful, but the real magic happens when you align that tech with your unique business intuition. It’s okay to start small.

In fact, some of the most successful enterprise agents we’ve seen started as a simple solution to one annoying, repetitive task. From there, they grew into the robust, self-correcting systems that now save those companies thousands of hours and millions of dollars.

The “Wait and See” era of AI is officially over. The tools are mature, the security is enterprise-grade, and the ROI is no longer theoretical; it’s visible on the balance sheet. Your competitors are likely already experimenting with these agentic loops, and the gap between those who adapt and those who stall is widening every day.

You don’t have to navigate this transition alone. At Bitcot, we live and breathe this stuff. We know how to turn a complex, messy business problem into a streamlined, automated success story. Explore our custom AI agent development services and let’s turn your vision into a production-ready reality.

If you’re ready to stop talking about AI and start deploying it, we’re here to help you cross the finish line with confidence. Get in touch with our team.